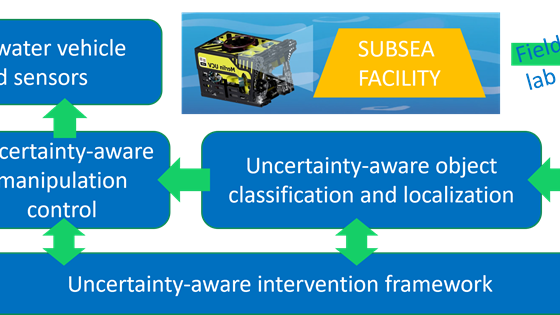

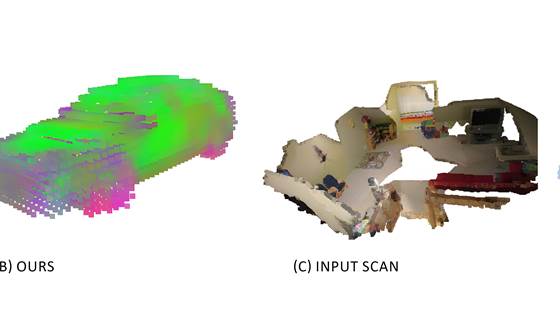

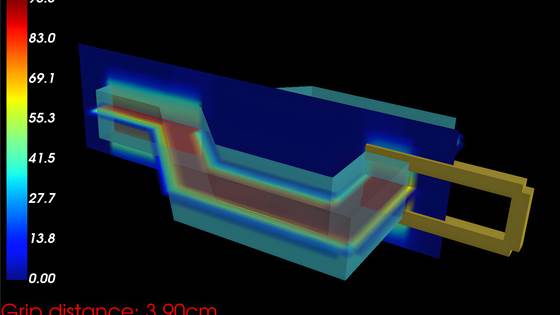

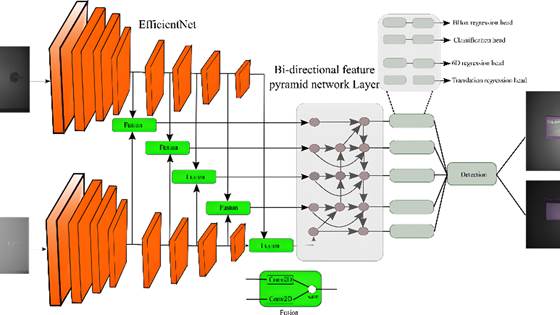

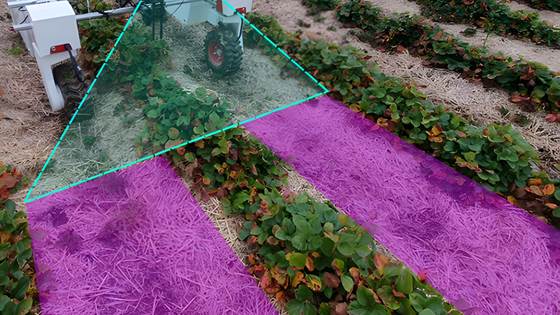

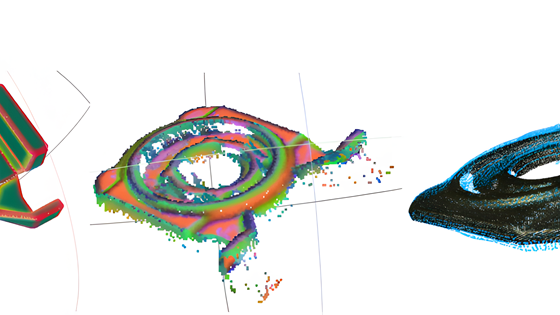

Our research focuses on designing, developing, and training neural networks for a wide range of 2D and 3D vision applications, such as object detection, inspection, semantic segmentation, pose estimation, classification, prediction, and anomaly detection. We also emphasize explainable deep learning through physics-aware modeling and uncertainty estimation. These help ensure that models can be deployed safely in autonomous systems and other high-risk applications.

We collaborate closely with our customers to integrate domain-specific knowledge into their deep learning models. This helps produce more robust solutions, reduce model size, enhance explainability, and improve AI performance.

A successful deep learning system must be trained on a large, representative dataset, which can be costly to acquire. Our group uses data-efficient learning methods to lower the cost of acquiring the labeled data needed to train deep learning models. These methods include simulated labeled datasets and semi- and self-supervised learning techniques that leverage unlabeled data.

Our expertise

- Deep learning on 3D data (point clouds), 2D images, and video for various applications.

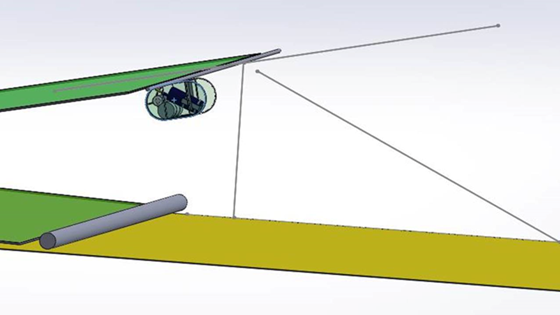

- Deep learning for inspection and situational awareness in autonomous systems such as robots and drones.

- Data-efficient learning (techniques that reduce the amount of labeled data required).

- Improved explainability of deep learning models through physics-aware modeling and uncertainty estimation.

- Efficient deep learning models running on embedded processors.