RGB-D scanning and reconstruction have advanced significantly in recent years, thanks to the growing availability of commodity range sensors such as the Zivid, Microsoft Kinect, and Intel RealSense. State-of-the-art 3D reconstruction techniques can now capture and recreate real-world objects with high accuracy, enabling applications such as manufacturing artifact detection, content production, and augmented reality. However, geometric incompleteness, noise, and uneven shrinkage and deformation during the production process have kept these advances limited to certain use cases. In particular, matching 3D scans to clean, sharp 3D models for quality control remains difficult.

Supervised training of deep neural networks requires large amounts of labeled data, which complicates their application to domains where training data is scarce or where collecting new datasets is laborious and expensive. We therefore aim to train the network in a self-supervised fashion. Self-supervision is a form of unsupervised learning in which the data itself provides the supervision. The objective is to pre-train deep neural networks on pretext tasks that can be generated automatically from the data and require no costly manual annotation. In general, part of the data is withheld and the network is tasked with predicting it. The pretext task defines a proxy loss, and to solve it the network is forced to learn what we actually care about, such as a semantic representation. Once pre-trained, a network can be adapted to a target task using only a small amount of labeled data.
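The "withhold and predict" idea can be sketched on a point cloud as follows. This is a minimal illustration, not the pretext task used in the paper: the function names `make_pretext_pair` and `chamfer_distance`, the 25% mask ratio, the random point cloud, and the choice of Chamfer distance as the proxy loss are all assumptions made for the example.

```python
import numpy as np

def make_pretext_pair(points, mask_ratio=0.25, rng=None):
    """Split a point cloud (N, 3) into a visible input and a withheld target."""
    rng = np.random.default_rng() if rng is None else rng
    n = points.shape[0]
    n_masked = int(round(n * mask_ratio))
    perm = rng.permutation(n)
    masked_idx, visible_idx = perm[:n_masked], perm[n_masked:]
    return points[visible_idx], points[masked_idx]

def chamfer_distance(a, b):
    """Symmetric Chamfer distance between point sets of shape (N, 3) and (M, 3)."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return d.min(axis=1).mean() + d.min(axis=0).mean()

# Hypothetical input: a random cloud standing in for a real scan.
rng = np.random.default_rng(0)
cloud = rng.random((200, 3))
visible, target = make_pretext_pair(cloud, mask_ratio=0.25, rng=rng)

# A network would predict the withheld points from `visible`.
# Here a trivial placeholder (the visible centroid, repeated) stands in for it.
prediction = np.tile(visible.mean(axis=0), (target.shape[0], 1))
loss = chamfer_distance(prediction, target)  # proxy loss, no labels needed
```

No manual annotation enters the loss: the supervision signal is the withheld part of the scan itself.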

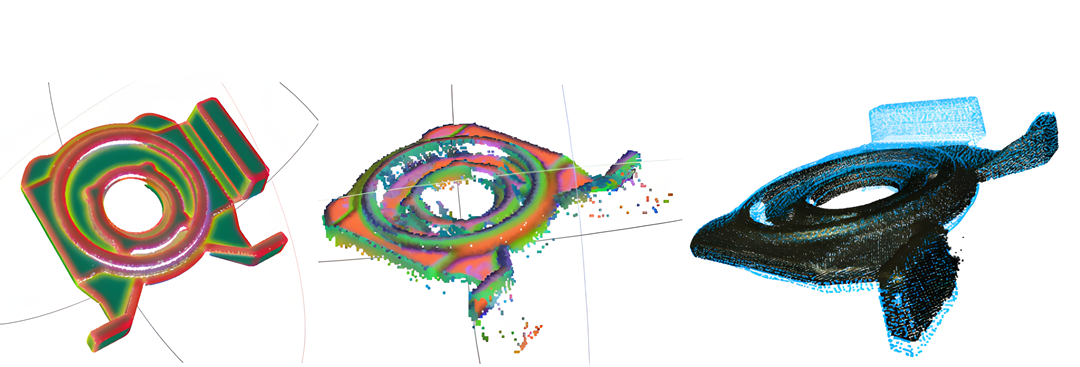

Compared to earlier works, our CAD-Scan registration approach is robust to deformation caused by the solidification process and to differences between the CAD model and the scan, and, unlike ICP, it relies on global registration. Sample figures are shown below.

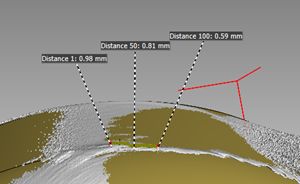

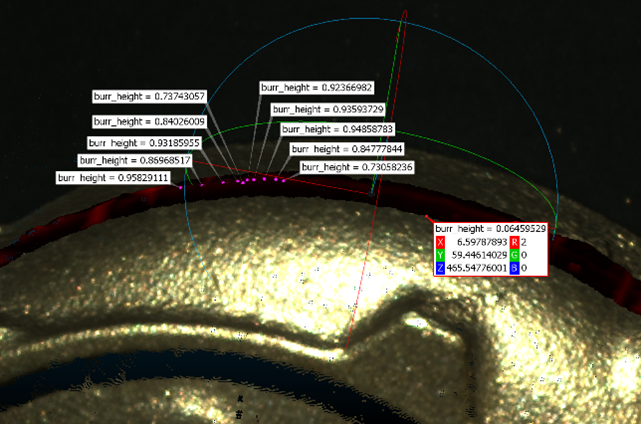

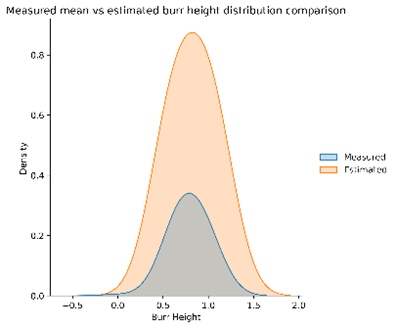

Although quantitative evaluation on the Zivid scans serves to assess the burr quantification algorithm, it is also important to compare the estimated burr size against a high-resolution industrial measuring device. An industrial computed tomography (CT) scanner (Zeiss Metrotom 1500) was used to scan the workpiece and produce a volumetric representation of it. Volume Graphics software was used to analyze the scans, register the parts, and measure the burr height. The figure below compares the burr height estimated by our approach with that measured by the industrial CT scanner.

Figure 2: Sample points on the surface of the workpiece as measured with the CT scanning machine (left) and burr estimation using our approach (right).

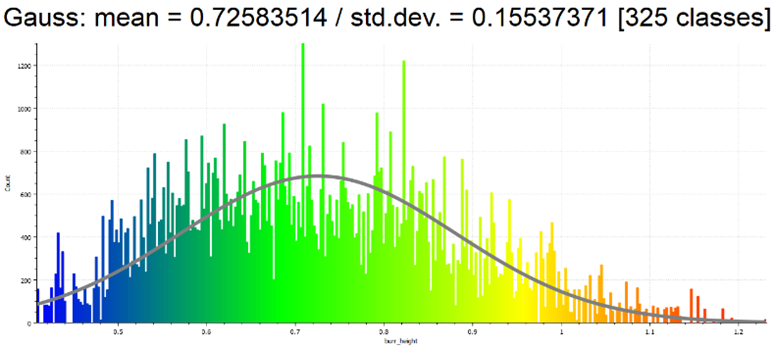

Figure 3: Comparison of estimated burr height distributions for burr points. Burr height distribution for a sample region from CT scanning (left) and our approach versus CT-measured burr height in the same plot (right).
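A comparison of this kind can be sketched as below. This is only an illustration under stated assumptions: it presumes the two methods yield burr heights at matched sample points, the function name `compare_heights` and the bin count are hypothetical, and the numbers are toy values, not measurements from the paper.

```python
import numpy as np

def compare_heights(estimated, reference, bins=5):
    """Point-wise error and shared-bin histograms for two height arrays."""
    estimated = np.asarray(estimated, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if estimated.shape != reference.shape:
        raise ValueError("height arrays must be matched point for point")
    abs_err = np.abs(estimated - reference)
    # Shared bin edges so the two distributions are directly comparable.
    lo = min(estimated.min(), reference.min())
    hi = max(estimated.max(), reference.max())
    edges = np.linspace(lo, hi, bins + 1)
    est_hist, _ = np.histogram(estimated, bins=edges)
    ref_hist, _ = np.histogram(reference, bins=edges)
    return {
        "mae": abs_err.mean(),
        "max_abs_error": abs_err.max(),
        "edges": edges,
        "est_hist": est_hist,
        "ref_hist": ref_hist,
    }

# Toy values in arbitrary length units (hypothetical, for illustration only).
ct_heights = np.array([0.10, 0.25, 0.40, 0.55, 0.70])
our_heights = np.array([0.12, 0.20, 0.45, 0.50, 0.75])
report = compare_heights(our_heights, ct_heights)
```

Using one set of bin edges for both arrays keeps the histograms aligned, which is what allows the two distributions to be overlaid in a single plot.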

Paper ref: Ahmed Mohammed, Johannes Kvam, Ingrid Onstein, Marianne Bakken, Helene Schulerud. "Automated 3D burr detection in cast manufacturing using sparse convolutional neural networks." Robotics and Computer-Integrated Manufacturing.