Deep neural networks could be game-changing for cost-effective visual navigation, but the gap between controlled data science research and deployment in real applications still hinders wider adoption. In this work, we address several issues commonly faced when training neural networks for practical applications: how to acquire training labels efficiently, how to inspect which features the network has learned, and how to ensure robustness against dataset bias. We demonstrate our methods in three scientific papers, through field trials with the Thorvald agri-robot.

End-to-end learning

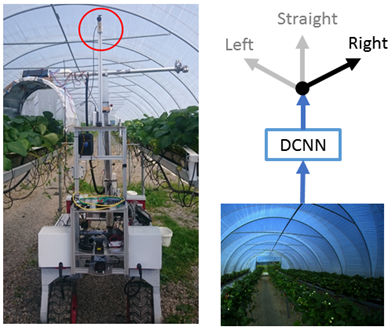

In this work, we develop a new deep learning method for autonomous robot row following in a strawberry polytunnel, based on a single wide-field-of-view camera (see Figure 1). We explore an end-to-end learning approach that enables efficient data capture and labelling: a neural network is trained on images from a drive along a crop row, automatically labelled using different views extracted from the wide-field-of-view camera. The method shows good classification results (99.5% accuracy), but as a black-box method it is hard to debug or adjust in practical applications.
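To illustrate how several labelled views can come from one wide-field-of-view frame, here is a minimal sketch, not the method from the paper. It assumes the wide image has already been rectified so that a horizontal shift of the crop window approximates a yaw offset of the robot, and that there are three classes. The function `extract_labelled_views`, the class names in `VIEW_LABELS`, and the `crop_width`/`shift` parameters are all hypothetical.

```python
from typing import Dict, List, Tuple

Image = List[List[int]]  # hypothetical representation: grayscale image as rows of pixel values

# Hypothetical labelling rule: a crop from the left part of the wide image looks as if
# the robot were pointing left of the row, so the corrective action is to steer right.
VIEW_LABELS: Dict[str, str] = {"left": "steer_right", "centre": "straight", "right": "steer_left"}


def extract_labelled_views(frame: Image, crop_width: int, shift: int) -> List[Tuple[Image, str]]:
    """Cut three horizontally shifted crops from one wide frame and label each crop."""
    width = len(frame[0])
    centre_start = (width - crop_width) // 2
    starts = {
        "left": centre_start - shift,
        "centre": centre_start,
        "right": centre_start + shift,
    }
    samples = []
    for view, start in starts.items():
        if start < 0 or start + crop_width > width:
            raise ValueError(f"{view} crop falls outside the frame")
        # Keep every image row, but only the columns inside this crop window.
        crop = [row[start:start + crop_width] for row in frame]
        samples.append((crop, VIEW_LABELS[view]))
    return samples
```

Every frame recorded on a single drive along the row thus yields several training samples without manual annotation; the number of views and the shift per class are design choices that would have to match the camera geometry.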

Paper: "End-to-end Learning for Autonomous Crop Row-following", IFAC 2019

Robot-supervised learning

In this work, we present a robot-supervised learning approach. It combines the best of two learning approaches: it is less of a black box than end-to-end learning, yet it keeps automatic data capture and label extraction. A teacher robot (see Figure 2) equipped with GNSS antennas is driven along a crop row annotated with GNSS coordinates, so that labels for crop row segmentation can be automatically projected into the camera image. These labels are used to train a neural network that detects the crop rows and steers the robot along them using only a camera.
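The key step is projecting GNSS-annotated row points into the camera image. The sketch below shows one way to do this under simplifying assumptions that are ours, not the paper's: flat ground, a 2D robot pose (x, y, yaw) in a local metric frame derived from the GNSS antennas, a camera mounted directly above the robot origin with only a downward pitch, and an ideal pinhole model without lens distortion. `PinholeCamera` and `project_row_point` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PinholeCamera:
    """Hypothetical camera model: ideal pinhole, no lens distortion."""
    fx: float      # focal length in pixels (horizontal)
    fy: float      # focal length in pixels (vertical)
    cx: float      # principal point, column
    cy: float      # principal point, row
    height: float  # mounting height above flat ground, metres
    pitch: float   # downward tilt of the optical axis, radians


def project_row_point(
    point: Tuple[float, float, float],
    robot_pose: Tuple[float, float, float],
    cam: PinholeCamera,
) -> Optional[Tuple[float, float]]:
    """Project a row point (local metric x, y, z) to pixel coordinates (u, v).

    robot_pose is (x, y, yaw) in the same local frame. Returns None for points
    behind the camera.
    """
    px, py, pz = point
    rx, ry, yaw = robot_pose
    dx, dy = px - rx, py - ry
    # World frame -> robot frame (forward, left, up).
    forward = math.cos(yaw) * dx + math.sin(yaw) * dy
    left = -math.sin(yaw) * dx + math.cos(yaw) * dy
    up = pz - cam.height
    # Robot frame -> camera frame (x right, y down, z along the pitched optical axis).
    s, c = math.sin(cam.pitch), math.cos(cam.pitch)
    x_c = -left
    y_c = -forward * s - up * c
    z_c = forward * c - up * s
    if z_c <= 0:
        return None
    # Perspective division followed by the intrinsic parameters.
    return cam.fx * x_c / z_c + cam.cx, cam.fy * y_c / z_c + cam.cy
```

For example, with `PinholeCamera(500.0, 500.0, 320.0, 240.0, 1.0, 0.0)` and the robot at the origin facing along x, a ground point 5 m ahead projects to `(320.0, 340.0)`. Projecting a densely sampled row centreline this way gives a polyline in the image, which could then be rasterised into a segmentation mask.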

Paper: "Robot-supervised Learning of Crop Row Segmentation", ICRA 2021

Hybrid learning

Marianne Bakken, Richard J. D. Moore, Johannes Kvam and Pål J. From. "Applied learning for row-following with agri-robots" (Submitted to Journal of Field Robotics)

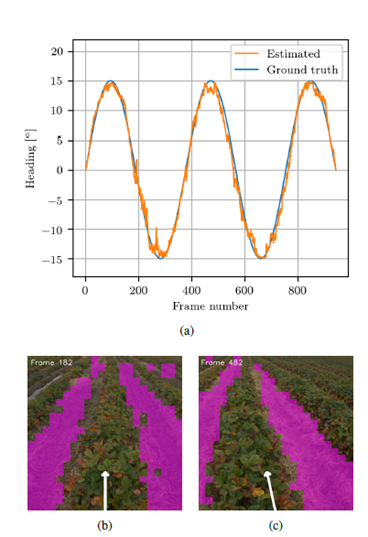

In this paper, we combine the robot-supervised and end-to-end learning approaches into a hybrid learning approach. We design a lightweight neural network architecture for row following that outputs the heading angle directly, but also shows the predicted crop row segmentation to increase explainability and robustness to dataset bias. We achieve good results on a test dataset (0.9 degrees mean absolute error in heading angle) and demonstrate row following in open-loop field trials with an agri-robot in a strawberry field. See Figure 3 for example results.
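The heading-angle figure above is an average error over a test set. As a hedged sketch of how such a number is commonly computed, the code below takes the mean absolute error in degrees between predicted and ground-truth headings. Wrapping each difference into [-180, 180) is our assumption; it only matters for large angles and leaves the small heading errors typical of row following unchanged. The names `wrap_degrees` and `heading_mae` are hypothetical.

```python
from typing import Sequence


def wrap_degrees(angle: float) -> float:
    """Wrap an angle difference into the interval [-180, 180) degrees."""
    return (angle + 180.0) % 360.0 - 180.0


def heading_mae(predicted: Sequence[float], ground_truth: Sequence[float]) -> float:
    """Mean absolute error between predicted and ground-truth heading angles, in degrees."""
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [abs(wrap_degrees(p - g)) for p, g in zip(predicted, ground_truth)]
    return sum(errors) / len(errors)
```

With wrapping, a prediction of 179 degrees against a ground truth of -179 degrees counts as a 2 degree error rather than 358 degrees.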

Figure 3: Results from open-loop field trials. Top: estimated (orange) vs. ground-truth (blue) heading angle. Bottom: example frames showing the estimated steering angle and lane segmentation.

Paper submitted to Journal of Field Robotics, under review.

Figure 2: Teacher robot equipped with GNSS antennas and a camera. Crop row labels are automatically projected into the camera image and used to train a neural network.