Intervention tasks on subsea installations require precise detection and localization of objects of interest. This problem is referred to as “pose estimation”.

Underwater optical (3D) imaging has opened up possibilities for providing high-density information about the surroundings of an Autonomous Underwater Vehicle (AUV). Accurate and robust 6-DoF localization of relevant objects relative to the AUV's robotic arm is a key enabler for autonomous intervention, e.g. turning valves or picking up objects.

The performance of 6-DoF localization has improved significantly with the advent of deep learning (DL), especially in terrestrial applications. Our research has focused on how 6-DoF DL methods can be adapted to images acquired in the underwater environment while retaining their superior performance. One of the main challenges is that the visual appearance of the same object varies with turbidity: the higher the turbidity, the more noise and the less contrast we observe in the images. Reliably training such DL networks requires large annotated datasets, which can be costly to generate.
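To give a rough feel for why contrast drops as turbidity rises, the sketch below applies the Beer-Lambert law, under which the attenuation length is the distance over which a collimated beam falls to 1/e of its initial intensity. This is an illustrative simplification rather than the model used in our work: it ignores scattered light, and the 2 m working distance is a hypothetical value. The two attenuation lengths are the clearest and most turbid conditions reported for the dataset.

```python
import math


def transmitted_fraction(distance_m: float, attenuation_length_m: float) -> float:
    """Fraction of unscattered light left after travelling distance_m (Beer-Lambert)."""
    if attenuation_length_m <= 0:
        raise ValueError("attenuation length must be positive")
    return math.exp(-distance_m / attenuation_length_m)


# Hypothetical working distance between camera and panel (an assumption).
WORKING_DISTANCE_M = 2.0

for length_m in (8.3, 2.2):
    fraction = transmitted_fraction(WORKING_DISTANCE_M, length_m)
    print(f"attenuation length {length_m} m: "
          f"{fraction:.2f} of the light survives {WORKING_DISTANCE_M} m")
```

A shorter attenuation length means less direct signal reaches the sensor over the same path, which is consistent with the noisier, lower-contrast images seen at high turbidity.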

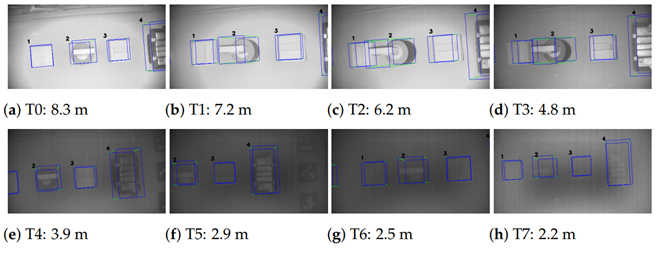

One approach that we have developed (and published in [1]) is a way of generating a dataset for 6-DoF localization with automated 6-DoF labeling, even under turbid conditions. We created a mockup subsea panel containing objects such as valves, gauges and fish-tails. A number of ArUco markers were placed around the panel, both above and below the water. Two cameras were rigidly attached to each other: one was located above water, while the other (an underwater 3D camera) was located underwater. Because the positions of the ArUco markers relative to the objects of interest were well calibrated, we could use the ArUco detections above water to annotate the 6-DoF poses of the objects in the underwater camera, even under very turbid conditions. Figure 1 shows the annotated detections overlaid on the intensity image across turbidities. We measured turbidity as attenuation length, ranging from 8.3 m to 2.2 m.
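The labeling idea can be sketched as a chain of rigid transforms: the pose of the marker-defined panel frame in the above-water camera, the fixed rig calibration between the two cameras, and the calibrated position of each object on the panel. The snippet below is a minimal, dependency-free illustration under assumed values. The frame names (`T_uw_air`, `T_air_panel`, `T_panel_obj`), the helper functions and all numbers are hypothetical and are not taken from [1]; rotations are restricted to yaw for brevity.

```python
import math


def make_pose(yaw_deg, translation):
    """4x4 homogeneous transform: rotation about z by yaw_deg plus a translation."""
    yaw = math.radians(yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(*transforms):
    """Multiply 4x4 transforms left to right, starting from the identity."""
    result = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for t in transforms:
        result = [
            [sum(result[i][k] * t[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)
        ]
    return result


# All values below are made up for illustration.
T_uw_air = make_pose(0.0, (0.0, 0.5, 0.0))      # rig calibration: air camera in underwater camera frame
T_air_panel = make_pose(90.0, (0.0, 0.0, 2.0))  # panel pose from marker detections above water
T_panel_obj = make_pose(0.0, (0.3, 0.0, 0.0))   # calibrated object position on the panel

# Object pose in the underwater camera frame, obtained without detecting
# anything in the (possibly very turbid) underwater image itself.
T_uw_obj = compose(T_uw_air, T_air_panel, T_panel_obj)
print([round(row[3], 3) for row in T_uw_obj[:3]])  # translation part of the label
```

Because only the above-water detection changes from frame to frame, the quality of the label does not depend on the water clarity. In practice a matrix library such as NumPy would replace the hand-written multiplication.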

Figure 1: Subsea panel with projected ground-truth pose as seen across turbidities. Images were acquired under seven different turbidities, ranging from 8.3 m down to 2.2 m attenuation length.

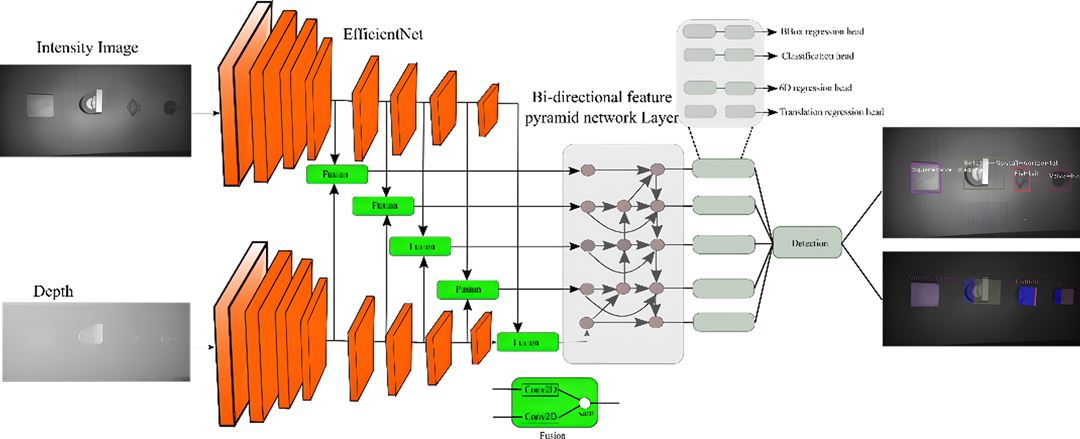

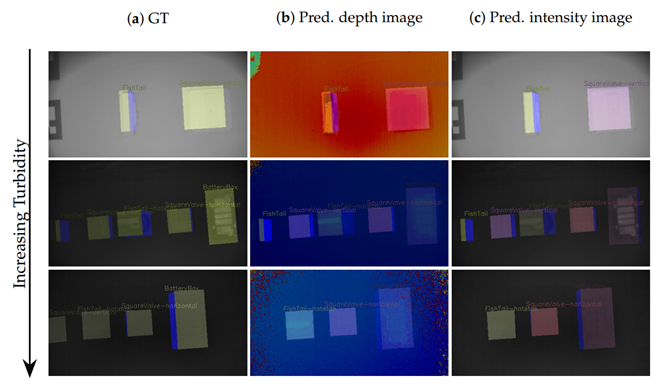

We also developed and trained a DL pipeline to predict the 6-DoF pose of the annotated objects. The DL network includes four sub-tasks that together solve the task of 6D object pose estimation. Class and box prediction sub-networks detect objects in the 3D data while handling multiple object categories and instances. Figure 2 shows diagrammatically how the network is designed, while Figure 3 shows some prediction results from the network across turbidities.

projected on the depth and intensity image, respectively. The top, middle and last rows show ground-truth and predicted pose results for turbidities 1, 3 and 6. The last row shows a mis-detection (i.e., four objects in the ground truth and three detected objects in the prediction) under partial occlusion and low depth resolution.
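Overlaying a pose on an image, as in the figures, amounts to transforming points from the object frame into the camera frame and projecting them onto the image plane. The sketch below uses an ideal pinhole model as an assumption: it ignores lens distortion and refraction at the underwater housing, and the intrinsics (`fx`, `fy`, `cx`, `cy`) and the pose are hypothetical values, not those of our camera.

```python
def transform_point(T, point):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    x, y, z = point
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


def project_point(point_cam, fx, fy, cx, cy):
    """Ideal pinhole projection of a camera-frame point to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return (fx * x / z + cx, fy * y / z + cy)


def project_axes(T_cam_obj, fx, fy, cx, cy, axis_len=0.1):
    """Pixel positions of the object origin and the tips of its x, y, z axes."""
    points_obj = [(0, 0, 0), (axis_len, 0, 0), (0, axis_len, 0), (0, 0, axis_len)]
    return [
        project_point(transform_point(T_cam_obj, p), fx, fy, cx, cy)
        for p in points_obj
    ]


# Hypothetical pose: object 2 m straight ahead, axes aligned with the camera.
T_cam_obj = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 2.0],
    [0.0, 0.0, 0.0, 1.0],
]
pixels = project_axes(T_cam_obj, fx=800.0, fy=800.0, cx=320.0, cy=240.0)
for name, (u, v) in zip(("origin", "x", "y", "z"), pixels):
    print(f"{name}: ({u:.1f}, {v:.1f})")
```

Drawing the same axes for both the ground-truth and the predicted pose gives a quick visual check of how far a prediction is off.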

[1] Mohammed, Ahmed, Johannes Kvam, Jens T. Thielemann, Karl H. Haugholt, and Petter Risholm. 2021. "6D Pose Estimation for Subsea Intervention in Turbid Waters" Electronics 10, no. 19: 2369. https://doi.org/10.3390/electronics10192369.