Recent advances in 3D data acquisition have led to the increasing use of point clouds. A point cloud is a collection of data points in 3D space, typically representing the surface of an object or a scene. In recent years, supervised deep learning techniques have shown remarkable results in point cloud analysis. However, supervised methods require labeled data, which can be difficult and expensive to obtain. Self-supervised learning has emerged as a solution to this problem, as it leverages unlabeled data to learn useful representations. In this paper, we propose a self-supervised method for point cloud representation learning that combines point-based and instance-based self-supervision in a single training strategy and can serve as a general pretraining step. Our method, called Semantic View Consistency (SVC), uses a suitable self-supervised pretext task for both the encoder and the decoder to obtain geometrically relevant representations.
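To make the idea of combining point-based and instance-based self-supervision concrete, the following is a minimal sketch and not the SVC objective itself, whose exact form is not given here. It assumes two augmented views of the same point cloud, per-point features whose rows correspond across views, cosine distance as the consistency measure, and mean pooling for the instance-level feature. All function names and the weights `w_point` and `w_instance` are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two feature vectors (lists of floats)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-12)  # small epsilon avoids division by zero

def mean_pool(feats):
    """Average per-point features into one instance-level feature (assumed pooling)."""
    n = len(feats)
    return [sum(column) / n for column in zip(*feats)]

def point_consistency(feats_a, feats_b):
    """Mean cosine distance between corresponding points of the two views.

    Assumption: row i of feats_a and row i of feats_b describe the same point.
    """
    total = sum(1.0 - cosine(p, q) for p, q in zip(feats_a, feats_b))
    return total / len(feats_a)

def instance_consistency(feats_a, feats_b):
    """Cosine distance between the pooled (whole-object) features of the two views."""
    return 1.0 - cosine(mean_pool(feats_a), mean_pool(feats_b))

def two_level_consistency_loss(feats_a, feats_b, w_point=1.0, w_instance=1.0):
    """Weighted sum of the point-level and instance-level terms (hypothetical weights)."""
    return (w_point * point_consistency(feats_a, feats_b)
            + w_instance * instance_consistency(feats_a, feats_b))

if __name__ == "__main__":
    view_a = [[1.0, 0.0], [0.0, 1.0]]
    view_b = [[0.9, 0.1], [0.2, 0.8]]
    print(two_level_consistency_loss(view_a, view_b))
```

Identical views give a loss near zero, and the loss grows as the per-point or pooled features of the two views diverge. A consistency term that uses only positive pairs can collapse to a constant feature, so an objective of this kind is typically paired with negatives or another anti-collapse mechanism.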

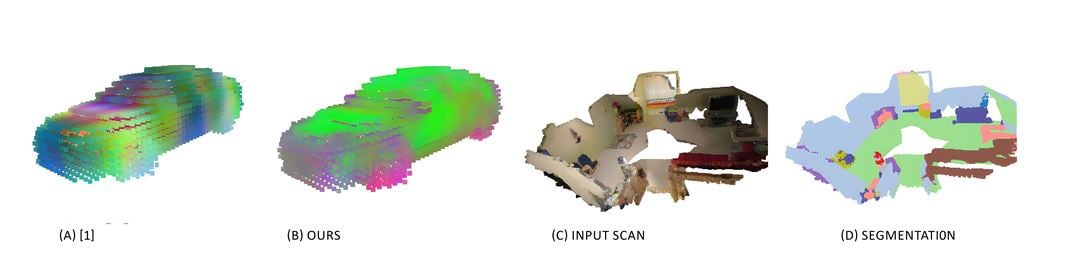

We evaluate our method on two public benchmark datasets, ShapeNet and ScanNet, and compare it with state-of-the-art self-supervised methods [1,2]. The experimental results show that the pre-trained SVC achieves 66% accuracy on ShapeNet without fine-tuning. Furthermore, we visualize the learned representations using t-SNE and show that they are semantically meaningful (Fig. 1a, 1b). In addition, we demonstrate that the learned representations transfer to downstream tasks such as semantic segmentation (Fig. 1c, 1d). Finally, we conduct an ablation study to analyze the impact of the different components of SVC and show that enforcing semantic consistency significantly improves performance. Overall, SVC offers a promising solution for self-supervised point cloud representation learning and has the potential to be applied to a wide range of 3D point cloud analysis tasks.

[1] Saining Xie, Jiatao Gu, Demi Guo, Charles R. Qi, Leonidas Guibas, and Or Litany. PointContrast: Unsupervised pre-training for 3D point cloud understanding. In ECCV, Springer, 2020, pp. 574–591.

[2] Christopher Choy, Jaesik Park, and Vladlen Koltun. Fully convolutional geometric features. In Proceedings of the IEEE/CVF ICCV, 2019, pp. 8958–8966.

Fig. 1 (above): (a) and (b) comparison of learned features on ShapeNet data; (c) and (d) evaluation on the downstream task of semantic segmentation.