2019: Learning from Data

Abstract

The availability of ever more computing power and data has led to an enormous development of data-driven methods in recent years. In this winter school, we will look at methods for using the knowledge in data to improve our computer algorithms, and at the interplay between the two. The school will focus on the following topics and try to connect the dots between these interrelated fields:

• Data assimilation

• Inverse modeling and parameter estimation

• Uncertainty quantification

• Value of information

• Machine learning and artificial intelligence

The school will consist of lectures and interactive sessions and will include a poster session where participants can share their own work with each other. Examples will be given for several different application areas, and the techniques and ideas are applicable to a wide range of fields in eScience.

The fields can be summarized as follows. Data assimilation brings real-world knowledge, such as measurements, into our computer models, and inverse modeling tries to estimate the state that caused the observations we see. Uncertainty quantification tells us how good our models are, where they can be trusted, and perhaps more importantly where they are quite uncertain. To decrease the uncertainty of a model, we can, for example, take more measurements close to where the model is uncertain. This brings in the concept of value of information: what is the value of information fed into our computer models, and what information is most valuable? Finally, machine learning and artificial intelligence sit at the extreme end of data-based algorithms, relying solely on the data we observe.

Data assimilation is the process of getting data into our models and making our computer models fit what we observe. Weather prediction systems do this routinely by assimilating temperature and pressure measurements to adjust the computer model of the atmosphere. The techniques include ensemble Kalman filtering, 3D-Var, 4D-Var, nonlinear particle filters, etc. Closely related to data assimilation is the process of inverse modeling and parameter estimation: based on a set of measurements, we want to form a hypothesis about the state that caused these observations.
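As a minimal sketch of what assimilating a single measurement can mean, the scalar Kalman analysis step below blends a model forecast with one observation, weighting each by its error variance. The function name and the temperature numbers are hypothetical and chosen only for illustration; operational systems work with high-dimensional states and far more elaborate schemes.

```python
def kalman_update(forecast, forecast_var, observation, obs_var):
    """Combine a scalar forecast and a scalar observation, both with Gaussian errors."""
    gain = forecast_var / (forecast_var + obs_var)  # Kalman gain, between 0 and 1
    analysis = forecast + gain * (observation - forecast)
    analysis_var = (1.0 - gain) * forecast_var  # never larger than forecast_var
    return analysis, analysis_var


# Hypothetical numbers: the model predicts 2.0 degrees (error variance 4.0),
# while a thermometer reads 3.0 degrees (error variance 1.0).
analysis, analysis_var = kalman_update(2.0, 4.0, 3.0, 1.0)
print(analysis, analysis_var)
```

Because the observation is assumed to be more accurate than the forecast, the analysis is pulled most of the way towards the measurement, and its variance is smaller than that of either source alone.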

Uncertainty quantification tries to answer the question of how uncertain our model is, and specifically when and where it is most uncertain. The Lorenz attractor, for example, will have high uncertainties: after a sufficiently long time, two points that start arbitrarily close to each other will have drifted far apart. However, for sufficiently short timespans, the two points will remain close and follow roughly the same trajectory. Determining how accurate a result is, how far into the future our models can predict, and where our models can be trusted is essential knowledge.
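The sensitivity described above can be illustrated with a short sketch. It integrates the Lorenz system with the classical parameter values (sigma = 10, rho = 28, beta = 8/3) using a fourth-order Runge-Kutta scheme, and tracks the distance between two trajectories whose starting points differ by a tiny perturbation. The step size, initial state and perturbation size are assumptions made for this example.

```python
import math


def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz system."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one classical Runge-Kutta step of size dt."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(shifted(state, k1, dt / 2))
    k3 = lorenz_rhs(shifted(state, k2, dt / 2))
    k4 = lorenz_rhs(shifted(state, k3, dt))
    return tuple(
        s + dt / 6.0 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def separation_over_time(initial, perturbation=1e-8, dt=0.01, steps=4000):
    """Distance between a trajectory and a slightly perturbed copy, per step."""
    a = initial
    b = (initial[0] + perturbation, initial[1], initial[2])
    distances = []
    for _ in range(steps):
        a = rk4_step(a, dt)
        b = rk4_step(b, dt)
        distances.append(math.dist(a, b))
    return distances


distances = separation_over_time((1.0, 1.0, 1.0))
print("distance at t = 1: ", distances[99])
print("largest distance:  ", max(distances))
```

For short times the two trajectories stay close together, while over the full run the separation grows to the size of the attractor itself, which is the behaviour that limits how far ahead such a model can predict.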

Value of information addresses the uncertainties in a model and how they can be reduced by gathering extra information. However, all information comes at a cost, whether computational, economic, or otherwise. A key question is then which data give the most value for the least cost. At one end of the scale, many measurement points that do not reduce the uncertainties in your model are not very valuable. At the other end, a single measurement of the right kind can even remove the ill-posedness of your model and make the solution unique.

Machine learning and artificial intelligence is a prime example of a field that has undergone a revolution in the last decade. These methods are so efficient that computers are faster and more accurate than humans when determining the breed of a dog in a photograph. At the same time, it is evident that these methods have their shortcomings. A machine learning algorithm can be trained to distinguish between a wolf and an Alaskan husky (a dog breed) based on photographs. A set of pictures of wolves is fed to the computer to teach it what a wolf looks like, and similarly for the husky. This is called training data, and when confronted with a previously unseen photograph, the computer uses the knowledge it extracted from all the images in the training data to classify it as either one or the other. However, we don't really know what knowledge the computer extracted from the training data. If the background is snow in all wolf images and grass in all husky images, we may as well have trained it to classify "snow" versus "grass". (Original source: https://arxiv.org/abs/1602.04938)

Program

Julien Brajard

Machine learning, and more specifically deep learning, has demonstrated impressive skill over the last decade across a varied range of tasks, such as playing games or recognizing pictures. This demonstrated performance over such diverse tasks hints at the potential of machine learning to improve the accuracy and/or speed of physical modelling, such as climate models. The objective of these lectures is to give a broad overview of machine learning techniques, focused on their potential application to physical modelling. During the week, we will cover the following topics:

1) An overview of the most used machine learning techniques.

2) Presentation of some essential concepts for applying machine learning.

3) Details on a few machine learning algorithms and deep learning.

4) Illustrations of the use of machine learning in physical modelling.

Useful References:

Goodfellow, Ian, et al. Deep learning. Vol. 1. Cambridge: MIT Press, 2016.

https://www.coursera.org/learn/machine-learning

Jo Eidsvik

We constantly use information to make decisions about utilizing and managing resources. For natural resources, there are often complex decision situations and variable interactions, involving spatial (or spatio-temporal) modeling. How can we quantitatively analyze and evaluate different information sources in this context? What is the value of data and how much data is enough?

The course covers multidisciplinary concepts required for conducting value of information analysis in multivariate and spatial models. Participants will gain an understanding of the integration of spatial statistical modeling and decision analysis for evaluating information gathering schemes. The value of information is computed before purchasing data, and can be useful for checking if data acquisition or processing is worth its price, or for comparing various experiments (active learning). The course will build a framework of perfect versus imperfect information, and total versus partial information where only a subset of the data is acquired or processed. The course uses slide presentations and runs hands-on projects on the computer (using R, Python or Matlab). Examples are used to demonstrate value of information analysis in various applications, including environmental sciences and petroleum. In these situations the decision maker could make better decisions by purchasing information (at a price) via, for instance, surveying, sensor usage or geophysical data.
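To make the distinction between perfect and imperfect information concrete, here is a minimal sketch for a toy decision problem that is not taken from the course material. It assumes a single project whose uncertain profit x is Gaussian, a decision maker who either develops the project (payoff x) or walks away (payoff 0), and data y = x + noise with Gaussian noise. Under these assumptions the value of information has a closed form; all function names and numbers are illustrative.

```python
import math


def std_normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def std_normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_positive_part(mean, sd):
    """E[max(0, X)] for X ~ N(mean, sd^2)."""
    if sd == 0.0:
        return max(0.0, mean)
    z = mean / sd
    return mean * std_normal_cdf(z) + sd * std_normal_pdf(z)


def value_of_information(prior_mean, prior_sd, noise_sd):
    """Expected gain from observing y = x + noise before deciding.

    Assumes prior_sd > 0. Setting noise_sd = 0 gives perfect information.
    """
    # Without data: develop only if the prior expected profit is positive.
    prior_value = max(0.0, prior_mean)
    # With data: the conditional mean E[x | y] is Gaussian around prior_mean
    # with the standard deviation below, and we develop only when it is positive.
    sd_conditional_mean = prior_sd**2 / math.sqrt(prior_sd**2 + noise_sd**2)
    posterior_value = expected_positive_part(prior_mean, sd_conditional_mean)
    return posterior_value - prior_value


perfect = value_of_information(prior_mean=0.0, prior_sd=1.0, noise_sd=0.0)
imperfect = value_of_information(prior_mean=0.0, prior_sd=1.0, noise_sd=1.0)
print("perfect information:  ", perfect)
print("imperfect information:", imperfect)
```

In this setting the data would be worth acquiring only if their price is below the computed value, and noisier data can never be worth more than perfect information.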

Lecture 1: Decision analysis and the value of information

Lecture 2: Spatial statistics, design of experiments and value of information analysis

Lecture 3: Approximate computations for value of information analysis

(The course material uses 'Value of Information in the Earth Sciences', 2015, Eidsvik, Mukerji and Bhattacharjya, Cambridge Univ Press)

Hans-Rudolf Künsch

Ensemble data assimilation and particle filters

Data assimilation means sequential estimation and prediction of the state of a dynamical system, e.g. the earth's atmosphere or an animal population in some habitat, based on knowledge of the dynamics of the system and on partial and noisy observations as they become available. The term is used mainly in geophysical applications, whereas in engineering the same problem is called filtering. In order to capture the uncertainty, prediction and estimation should be in the form of probability distributions. There is a general two-step recursion for the evolution of these distributions as new observations become available: In the propagation step, the filtering distribution, which describes the knowledge about the current state, is propagated forward according to the dynamics of the system to become the prediction distribution for the state at the next time step. In the update step, the information from the new observation is used to transform the prediction distribution into the next filtering distribution using Bayes' theorem. Except in a few special cases, these recursions cannot be computed in closed form and one uses approximations by Monte Carlo samples instead. These samples are usually called ensembles and their members are called particles. The two main ensemble methods are the Ensemble Kalman Filter (EnKF) and the Particle Filter (PF). They share the same propagation step, but differ in the implementation of the update step.
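The two-step recursion can be sketched with a basic (bootstrap) particle filter. The example below is an illustration under simple assumptions, not the lecturer's implementation: the state is a scalar autoregressive process, the observation is the state plus Gaussian noise, and the update step weights the particles by the likelihood and then resamples. The model parameters, function names and seeds are hypothetical.

```python
import math
import random


def propagate(particles, rng, phi=0.9, process_sd=1.0):
    """Propagation step: push every particle through the (assumed) dynamics."""
    return [phi * x + rng.gauss(0.0, process_sd) for x in particles]


def update(particles, observation, rng, obs_sd=1.0):
    """Update step: weight by the Gaussian likelihood, then resample."""
    log_w = [-0.5 * ((observation - x) / obs_sd) ** 2 for x in particles]
    largest = max(log_w)
    weights = [math.exp(lw - largest) for lw in log_w]  # avoids underflow
    return rng.choices(particles, weights=weights, k=len(particles))


def run_filter(observations, n_particles=500, seed=0):
    rng = random.Random(seed)
    particles = [0.0] * n_particles  # initial state assumed known
    means = []
    for y in observations:
        particles = propagate(particles, rng)
        particles = update(particles, y, rng)
        means.append(sum(particles) / len(particles))
    return means


def simulate(n_steps, seed=1, phi=0.9, process_sd=1.0, obs_sd=1.0):
    """Generate a synthetic truth and noisy observations from the same model."""
    rng = random.Random(seed)
    state, truth, observations = 0.0, [], []
    for _ in range(n_steps):
        state = phi * state + rng.gauss(0.0, process_sd)
        truth.append(state)
        observations.append(state + rng.gauss(0.0, obs_sd))
    return truth, observations


def rmse(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


truth, observations = simulate(500)
filter_means = run_filter(observations)
print("RMSE of raw observations:", rmse(observations, truth))
print("RMSE of filter means:    ", rmse(filter_means, truth))
```

An EnKF would reuse `propagate` unchanged and replace only `update`, shifting the particles with a Kalman-type correction instead of reweighting and resampling them.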

In the first lecture, I will introduce the problem, the terminology and notation that I will use and then present the basic ideas and algorithms of both EnKF and PF.

The EnKF relies explicitly on linear measurements with Gaussian errors and implicitly also on a Gaussian prediction distribution. However, it performs surprisingly well in high dimensions. The PF on the other hand is in principle able to handle fully nonlinear dynamics and observations, but it collapses easily in high dimensions. A lot of effort has been made to improve the performance of the PF and to develop hybrid methods which combine features of the EnKF and the PF. In the second lecture, I will explain the collapse of the PF and discuss modifications of the PF and some of the hybrid methods.
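The collapse of the PF in high dimensions can be seen in a small experiment. The sketch below assumes a standard normal prior in each coordinate and an observation of every coordinate equal to zero with unit error variance, and reports the effective sample size of the resulting importance weights. The setup and names are illustrative assumptions rather than an example from the lectures.

```python
import math
import random


def effective_sample_size(log_weights):
    """ESS = (sum w)^2 / sum w^2, computed from unnormalized log-weights."""
    largest = max(log_weights)
    w = [math.exp(lw - largest) for lw in log_weights]
    return sum(w) ** 2 / sum(x * x for x in w)


def ess_for_dimension(dim, n_particles=1000, seed=0):
    rng = random.Random(seed)
    log_weights = []
    for _ in range(n_particles):
        # Particle drawn from the prior N(0, I); the log-likelihood of the
        # all-zero observation is -0.5 * ||x||^2 up to an additive constant.
        squared_norm = sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(dim))
        log_weights.append(-0.5 * squared_norm)
    return effective_sample_size(log_weights)


for dim in (1, 10, 100):
    print(dim, round(ess_for_dimension(dim), 1))
```

With the number of particles held fixed, the effective sample size drops quickly as the dimension grows, until essentially a single particle carries all the weight.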

In many applications the high dimension of the state arises from discretizing continuous functions in space. In this case, all methods need to be localized both for stability and efficient computation. Here, localization means that the update of the state at one location depends only on observations at locations nearby. In the third lecture I will explain the different variants for localizing the EnKF and then discuss the additional complications that arise for PF and hybrid methods. Finally, I will give an introduction to methods used to approximate the smoothing distribution, i.e. the distribution of the whole state evolution until the current time, and to methods for estimating unknown fixed parameters in the dynamics of the state or in the distribution of observation errors.

I plan to include a few tutorial exercises using the software R to allow participants to gain some intuitive understanding of how and when methods work in simple examples.

References:

P. Fearnhead, H. R. Künsch: Particle filters and data assimilation. Annual Review of Statistics and its Application 5 (2018), 421-449.

P. J. van Leeuwen, H. R. Künsch, L. Nerger, R. Potthast, S. Reich: Particle filters for applications in geosciences. Preprint, available at https://arxiv.org/abs/1807.10434

Kjetil Olsen Lye

In this session, we will cover uncertainty quantification of parametrized partial differential equations (PDEs), where the parameters represent uncertainties in the system (for example initial position, heat conductivity, forcing terms, or geometry). Several problems coming from both application and theory can be formulated as a PDE of this form. We begin by surveying Monte Carlo methods for high-dimensional integrals of computationally expensive integrands, including Quasi-Monte Carlo and Multilevel Monte Carlo, and give a pragmatic overview of the theory. Time will be given to hands-on examples and exercises. In the end, we will apply the methods to hyperbolic conservation laws.
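As a small illustration of the idea, the sketch below estimates the expected solution of a heat equation with an uncertain conductivity, once with plain Monte Carlo and once with a one-dimensional quasi-Monte Carlo rule based on the van der Corput sequence. The problem is an assumption chosen because it has a closed-form solution: u_t = kappa u_xx on [0, 1] with u(x, 0) = sin(pi x), zero boundary values, and kappa uniformly distributed on [0.5, 1.5]. Multilevel Monte Carlo is not shown.

```python
import math
import random


def midpoint_temperature(kappa, t=0.1):
    """Exact u(0.5, t) for u_t = kappa * u_xx with u(x, 0) = sin(pi * x)."""
    return math.exp(-kappa * math.pi**2 * t)


def exact_mean(t=0.1):
    """E[u(0.5, t)] for kappa ~ Uniform(0.5, 1.5), integrated analytically."""
    a = math.pi**2 * t
    return (math.exp(-0.5 * a) - math.exp(-1.5 * a)) / a


def van_der_corput(index, base=2):
    """The index-th point of the van der Corput low-discrepancy sequence."""
    result, denominator = 0.0, 1.0
    while index > 0:
        index, digit = divmod(index, base)
        denominator *= base
        result += digit / denominator
    return result


def monte_carlo_mean(n_samples, seed=0):
    rng = random.Random(seed)
    samples = (midpoint_temperature(rng.uniform(0.5, 1.5)) for _ in range(n_samples))
    return sum(samples) / n_samples


def quasi_monte_carlo_mean(n_samples):
    samples = (midpoint_temperature(0.5 + van_der_corput(i)) for i in range(n_samples))
    return sum(samples) / n_samples


reference = exact_mean()
mc_error = abs(monte_carlo_mean(1024) - reference)
qmc_error = abs(quasi_monte_carlo_mean(1024) - reference)
print("Monte Carlo error:      ", mc_error)
print("quasi-Monte Carlo error:", qmc_error)
```

In a realistic setting each sample would require a numerical PDE solve instead of a formula, which is why the number of samples needed for a given accuracy matters so much.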

References / Software:

Please follow the installation instructions to install the required packages.

Lecturers

Hans R. Künsch is Professor Emeritus of Mathematics at ETH Zurich. He has been a professor at ETH since 1983 and has worked on spatial statistics and random fields, time series analysis, environmental modeling, and robust statistics and model selection. He has made significant contributions to these fields, and his work has 6500 citations on Google Scholar.

- Topics: Particle filters and nonlinear data assimilation

- Home page

Jo Eidsvik is Professor at NTNU and his research interests are spatio-temporal statistics and computational statistics, often applied to the Earth sciences. He completed his PhD in statistics at NTNU in 2003 and has recently published his book entitled Value of Information in the Earth Sciences together with his co-authors Tapan Mukerji (Stanford) and Debarun Bhattacharjya (IBM Research).

- Topics: Value of information

- Home page

Remus G. Hanea is Professor II at the University of Stavanger and Specialist of Reservoir Technology at Equinor (previously Statoil). His research interests include Ensemble Kalman filtering, data assimilation, and inverse modeling. He completed his PhD in 2006 at TU Delft, and has been teaching the topic of data assimilation to students at PhD level for many years.

- Topics: Ensemble Kalman filtering and data assimilation

- Home page

Julien Brajard is Assistant Professor at Sorbonne University and currently a visiting scientist at NERSC - Nansen Environmental and Remote Sensing Center. He completed his PhD at the University of Pierre et Marie Curie in 2006 and his research interests include remote sensing, inverse modelling, machine learning and data assimilation. He has been working at the intersection of these themes in the context of physical oceanography.

- Topics: The interplay between physical models, data assimilation, and machine learning

- Home page

Kjetil Olsen Lye is a research scientist at SINTEF and is currently completing his PhD thesis at ETH Zurich. His PhD work has centered around uncertainty quantification and the statistical solution of partial differential equations. He has also been running massively parallel simulations on supercomputers with the Alsvid-UQ software, utilizing over 1000 GPUs for his numerical simulations.

- Topics: Multilevel Monte Carlo sampling and uncertainty quantification in Python

- Home page

André R. Brodtkorb is a research scientist at SINTEF and associate professor at Oslo Metropolitan University. He completed his PhD in scientific computing at the University of Oslo in 2010, and his research interests are centered around applied mathematics and include numerical simulation, accelerated scientific computing, machine learning, and real-time scientific visualization.

- Topics: Multilevel Monte Carlo sampling and uncertainty quantification in Python

- Home page

More to come soon.

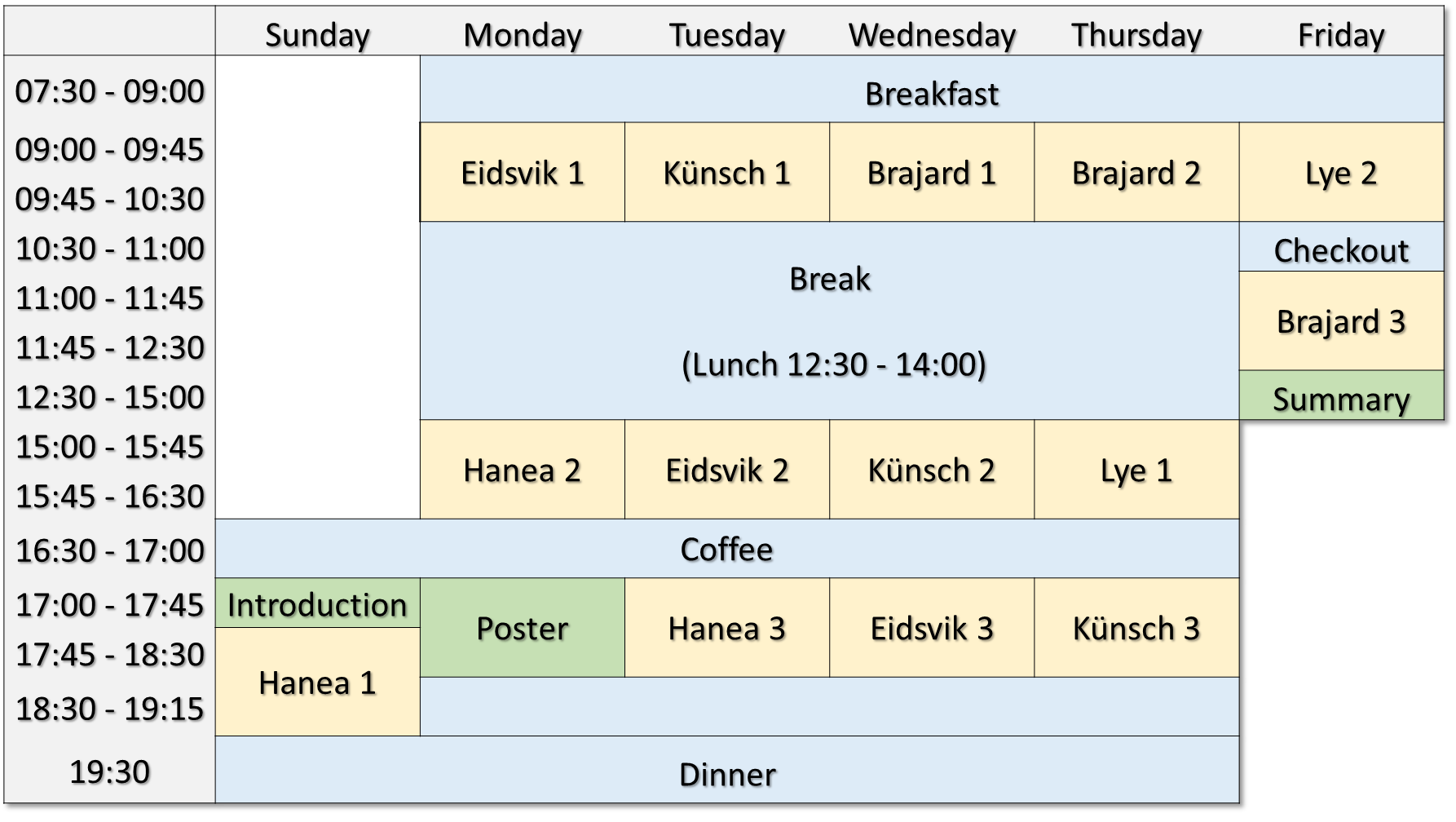

Schedule and lecture notes

The 2019 winter school starts on the afternoon of Sunday January 20th and ends on Friday January 25th after lunch. The school consists of 10-14 double lectures, and the typical daily schedule consists of breakfast until 9:00, lectures until 10:30, and then a long break from 10:30 to 15:00 that is well spent socializing and skiing. The afternoon consists of two double lectures separated by a coffee break. Additionally, there is a poster session scheduled for Tuesday afternoon. Participants eat a common dinner each evening. The schedule is shown in the timetable below, but please note that changes may occur.

Presentations

- Brodtkorb: Welcome, Summary, UQ in Python

- Jo Eidsvik: Lecture 1, Lecture 2, Lecture 3

- Hans R. Künsch: Lecture notes

- Kjetil Olsen Lye: Lecture 1, Lecture 2, Lecture 3

Posters

- Håvard Heitlo Holm, GPU Ocean: Efficient simplified ocean models and non-linear data assimilation

- Amjed Mohammed, Turbulent and financial time series analysis

- Elisa Rebolini, Gustav Baardsen, Audun Skau Hansen, Karl Roald Leikanger, and Thomas Bondo Pedersen, Pair cutoff determination based on subsets of simulation data points

- Tobias Herb, Daniel Thilo Schroeder, EXA - A distributed computation environment

- Fabio Zeiser et al., Stress testing the Oslo Method for heavy nuclei

- Cécile HAUTECOEUR and François GLINEUR, Nonnegative matrix factorization with polynomial signals via hierarchical alternating least squares

- Jean Rabault, Miroslav Kuchta & Atle Jensen, Discovering Active Flow Control strategies using Neural Networks and Deep Reinforcement Learning

- Kaja Kvello, UTILIZING DATA IN HEALTHCARE

- Sebastian Mitusch and Simon W. Funke, An algorithmic differentiation tool (not only) for FEniCS

- Ralf Schulze-Riegert, Michael Nwakile, Sergey Skripkin, James Baffoe, Dirk Geissenhoener, Olympus Challenge – Data Driven Optimization for Field Development Plan and Competition from Domain Knowledge

- Lars Frogner, Helle Bakke and Boris Vilhelm Gudiksen, Simulating nanoflares in the solar atmosphere

- Yingguang Chu, Digital Twins for Vessel Life Cycle Service (DigiTwinship)

- Xiaokang Zhang, Inge Jonassen, EFSIS: Ensemble Feature Selection Integrating Stability

- Nico Reissmann, Helge Bahmann, Jan Christian Meyer, Magnus Själander, Compiling with the Regionalized Value State Dependence Graph

- Alexander J Stasik, Espen Hagen, Jan-Eirik W Skaar, Torbjørn V Ness, Gaute T Einevoll, Estimating parameters of biological neuronal networks using Convolutional Neural Networks

- Snorri Ingvarsson and Movaffaq Kateb, Structural properties and static- and dynamic magnetization in soft ferromagnetic materials

- Aya Saad, Autonomous Imaging and Learning Ai Robot identifying planktON taxa in-situ

Important information

Applying for the winter school

There is no registration fee for the winter school, but participants must cover their own travel costs and hotel costs at Dr. Holms. You only have to register on the registration page.

Cost of participating

There is no registration fee for the winter school, but participants must cover their own travel costs and hotel costs at Dr. Holms.

Room allocation

The winter school has a limited number of rooms at Dr. Holms, which will be reserved on a first-come, first-served basis. In previous years we have exceeded our room allocation, so please register as early as possible!

Posters

The winter school welcomes all posters to be presented. The aim of the poster session is to make new contacts and share your research, and it is an informal event. You need to indicate in your registration if you want to present a poster during the poster session. Please limit your poster to A0 in portrait orientation.

Important information and links

- Registration page

- Registration deadline: See registration page

Participants

| Name | Field of research | Affiliation / Home institution | Email address |

|---|---|---|---|

| Samaneh Abolpour Mofrad | Mathematics | Western Norway University of Applied Sciences | sam-hjå-hvl.no |

| Faiga M. Alawad | Informatics | NTNU | faiga.alawad-hjå-ntnu.no |

| Guttorm Alendal | Mathematics | University of Bergen | guttorm.alendal-hjå-uib.no |

| Vaneeda Allken | Informatics | Institute of Marine Research | vaneeda-hjå-gmail.com |

| Mona Anderssen | Physics | University of Oslo | mona.anderssen.93-hjå-gmail.com |

| Jimmy Aronsson | Mathematics | Chalmers University of Technology | jimmyar-hjå-chalmers.se |

| Dina Margrethe Aspen | Decision analysis | NTNU | dina.aspen-hjå-ntnu.no |

| Gustav Baardsen | Physics | University of Oslo | gustav.baardsen-hjå-gmail.com |

| Olaf Trygve Berglihn | Chemistry | SINTEF Industri | olaf.trygve.berglihn-hjå-sintef.no |

| Sabuj Chandra Bhowmick | Statistics | NTNU | sabuj606-hjå-gmail.com |

| Øivind Josefsen Birkeland | Informatics | Western Norway University of Applied Sciences | oivind.birk-hjå-gmail.com |

| Simon Blindheim | HPC | NTNU | simon.blindheim-hjå-ntnu.no |

| Eivind Eigil Bøhn | Mathematics | SINTEF | eivind.bohn-hjå-gmail.com |

| Anna-Lena Both | Mathematics | University of Bergen / Gexcon AS | anna-lena.both-hjå-gexcon.com |

| Julien Brajard | Informatics | Sorbonne Université / NERSC | julien.brajard-hjå-nersc.no |

| Andreas Brandsæter | Statistics | DNV GL / University of Oslo | andreas.brandsaeter-hjå-dnvgl.com |

| André Rigland Brodtkorb | Informatics | SINTEF Digital / OsloMet | Andre.Brodtkorb-hjå-sintef.no |

| Nam Bui | Informatics | Oslo Metropolitan University | nam.bui-hjå-oslomet.no |

| Lubomir Bures | CFD | EPFL, Lausanne | lubomir.bures-hjå-epfl.ch |

| Kurdistan Chawshin | Petroleum Geoscience | NTNU | kurdistan.chawshin-hjå-ntnu.no |

| Yingguang Chu | Marine Engineering | Sintef Ålesund AS | yingguang.chu-hjå-sintef.no |

| Frederik Ferrold Clemmensen | Physics | University of Oslo | frederik.clemmensen-hjå-astro.uio.no |

| Wouter Jacob de Bruin | Machine learning | Equinor | wbr-hjå-equinor.com |

| Lene Norderhaug Drøsdal | Data science | Nextbridge | lnd-hjå-nextbridge.no |

| Thore Egeland | Statistics | NMBU | Thore.Egeland-hjå-nmbu.no |

| Jo Eidsvik | Statistics | NTNU | jo.eidsvik-hjå-ntnu.no |

| Shirin Fallahi | Mathematics | University of Bergen | shirin.fallahi-hjå-uib.no |

| Petra Filkukova | Psychology | Simula Research Laboratory | petrafilkukova-hjå-simula.no |

| Otávio Fonseca Ivo | Mathematics | NTNU | fonseca.i.otavio-hjå-ntnu.no |

| Lars Frogner | Physics | University of Oslo | lars.frogner-hjå-astro.uio.no |

| Håvard G. Frøysa | Mathematics | University of Bergen | havard.froysa-hjå-uib.no |

| Maryam Ghaffari | Physics | University of Bergen | Maryam.Ghaffari-hjå-uib.no |

| Florian Grabi | Embedded Computing | Fraunhofer IPA | florian.grabi-hjå-ipa.fraunhofer.de |

| Paulius Gradeckas | Statistics | PM Office of Republic of Lithuania | paulius.gradeckas-hjå-gmail.com |

| Stig Grafsrønningen | Mathematics | NextBridge Analytics | sgr-hjå-nextbridge.no |

| Odin Gramstad | Mathematics | DNV GL | Odin.Gramstad-hjå-dnvgl.com |

| Thomas Andre Larsen Greiner | Geophysics | University of Oslo / Lundin | thomas-larsen.greiner-hjå-lundin-norway.no |

| Morten Grøva | Physics | SINTEF Ocean | morten.grova-hjå-sintef.no |

| Arnt Grøver | Physics | SINTEF Industry | arnt.grover-hjå-sintef.no |

| Kristian Gundersen | Statistics | University of Bergen | Kristian.Gundersen-hjå-uib.no |

| Halvor Snersrud Gustad | Mathematics | NTNU / TechnipFMC | halvorsg-hjå-stud.ntnu.no |

| Espen Hagen | Physics | University of Oslo | espehage-hjå-fys.uio.no |

| Yvon Halbwachs | Informatics | Kalkulo AS | yvh-hjå-kalkulo.no |

| Valentin Hamaide | Mathematics | UCLouvain | valentin.hamaide-hjå-uclouvain.be |

| Nils Olav Handegard | Biology | Havforskingsinstituttet | nilsolav-hjå-hi.no |

| Remus Hanea | Mathematics | Equinor / University of Stavanger | rhane-hjå-equinor.com |

| Cécile Andrea Hautecoeur | Mathematics | UCLouvain | cecile.hautecoeur-hjå-uclouvain.be |

| Håvard Heitlo Holm | HPC | SINTEF Digital | havard.heitlo.holm-hjå-sintef.no |

| Magnus Holm | Informatics | University of Oslo | judofyr-hjå-gmail.com |

| Ivar Thokle Hovden | Physics | Oslo University Hospital | ivarth-hjå-student.matnat.uio.no |

| Kimberly Huppert | Geoscience | GFZ German Research Centre for Geosciences | khuppert-hjå-gfz-potsdam.de |

| Snorri Ingvarsson | Physics | University of Iceland | sthi-hjå-hi.is |

| Vijayasarathi Janardhanam | Mathematics | Heidelberg University | vijayasarathi.janardhanam-hjå-h-its.org |

| Alejandra Jayme | Mathematics | Universität Heidelberg | alejandra.jayme-hjå-h-its.org |

| Pål Vegard Johnsen | Statistics | SINTEF Digital / NTNU | paal.v.johnsen-hjå-gmail.com |

| Mahmood Jokar | Mathematics | Western Norway University of Applied Sciences | jmah-hjå-hvl.no |

| Vegard Kamsvåg | Cybernetics | DNV GL | vegardkamsvaag-hjå-gmail.com |

| Runhild Aae Klausen | Mathematics | FFI | runhild.klausen-hjå-gmail.com |

| Knut Erik Knutsen | Sensor data | DNV GL | knut.erik.knutsen-hjå-dnvgl.com |

| Makan KONTE | Informatics | ISIM BUSINESS SCHOOL | mkonte2-hjå-hotmail.fr |

| Frank Alexander Kraemer | Informatics | NTNU | kraemer-hjå-ntnu.no |

| Michael Kraetzschmar | Mathematics | Flensburg University of Applied Sciences | kraetzschmar-hjå-mathematik.fh-flensburg.de |

| Swetlana Kraetzschmar | Informatics | Flensburg University of Applied Sciences | swetlana.puschkarewskaja-kraetzschmar-hjå-fh-flensburg.de |

| Brage Strand Kristoffersen | HPC | NTNU | brage.s.kristoffersen-hjå-ntnu.no |

| Hans Rudolf Künsch | Statistics | ETH Zurich | kuensch-hjå-stat.math.ethz.ch |

| Kaja Kvello | Cybernetics | DNV GL | kaja.kvello-hjå-dnvgl.com |

| Vegard Berg Kvernelv | Mathematics | Norsk Regnesentral | vegard.kvernelv-hjå-nr.no |

| Johannes Langguth | HPC | Simula | langguth-hjå-simula.no |

| Oda Kristin Berg Langrekken | Physics | University of Oslo | o.k.b.labgrekken-hjå-fys.uio.no |

| Marco Leonardi | Informatics | NTNU | marco.leonardi-hjå-ntnu.no |

| Nicolas Limare | Data science | Bloomberg | nlimare-hjå-bloomberg.net |

| Kjetil Olsen Lye | Mathematics | ETH Zurich | kjetil.lye-hjå-sam.math.ethz.ch |

| Ketil Malde | Informatics | Institute of Marine Research | ketil.malde-hjå-imr.no |

| Simon Millerjord | Physics | University of Oslo | simonmillerjord-hjå-gmail.com |

| Sebastian Kenji Mitusch | Mathematics | Simula Research Laboratory | sebastkm-hjå-simula.no |

| Hugo Goncalo Antunes Moreira | Mathematics | Universitet i Bergen | hugo.moreira-hjå-student.uib.no |

| Fredrik Nevjen | Statistics | NTNU | fnevjen-hjå-gmail.com |

| Jun Nie | Aerodynamics | TU Delft | j.nie-hjå-tudelft.nl |

| Sotirios Nikas | Informatics | Heidelberg University | sotirios.nikas-hjå-h-its.org |

| Anna Oleynik | Mathematics | University of Bergen | anna.oleynik-hjå-uib.no |

| Viktor Olsbo | Statistics | Chalmers | viktor.olsbo-hjå-gmail.com |

| Rising John Osazuwa | Informatics | University of Ibadan, Nigeria | risingosazuwa-hjå-gmail.com |

| Bogdan Osyka | Mathematics | Rystad Energy | bogdan.osyka90-hjå-gmail.com |

| Andrew John Pensoneault | Mathematics | University of Iowa | andrew-pensoneault-hjå-uiowa.edu |

| Helén Persson | Physics | University of Oslo | helen.persson91-hjå-gmail.com |

| Long Pham | Informatics | NTNU | phamminhlong-hjå-gmail.com |

| Armin Pobitzer | Mathematics | SINTEF Ålesund | armin.pobitzer-hjå-sintef.no |

| Alexey Prikhodko | Mathematics | Novosibirsk state university | Prikhodko1997-hjå-gmail.com |

| Jean Rabault | Physics | University of Oslo | jean.rblt-hjå-gmail.com |

| Sonali Ramesh | HPC | Dr. Mgr educational and research institute | sonaliramesh03-hjå-gmail.com |

| Nico Reissmann | HPC | NTNU | nico.reissmann-hjå-ntnu.no |

| Filippo Remonato | Machine Learning | SINTEF | filippo.remonato-hjå-sintef.no |

| Signe Riemer-Sørensen | Data Analytics / AI | SINTEF Digital | signe.riemer-sorensen-hjå-sintef.no |

| Rebecca Anne Robinson | Physics | University of Iceland | becca.anne.robinson-hjå-gmail.com |

| Susanna Röblitz | Mathematics | University of Bergen | Susanna.Roblitz-hjå-uib.no |

| Thomas Röblitz | HPC | USIT / University of Oslo | thomas.roblitz-hjå-usit.uio.no |

| Anouar Romdhane | Physics | SINTEF Industry | anouar.romdhane-hjå-sintef.no |

| Hallvard Røyrane-Løtvedt | Statistics | Tryg forsikring | lothal-hjå-tryg.no |

| Yevgen Ryeznik | Statistics | Uppsala University | yevgen.ryeznik-hjå-math.uu.se |

| Aya Hassan Saad | Informatics | NTNU | aya.saad-hjå-ntnu.no |

| Martin Lilleeng Sætra | Informatics | Norwegian Meteorological Institute | martinls-hjå-met.no |

| Brandon Kawika Sai | Optimization | Fraunhofer IPA | brandon.sai-hjå-ipa.fraunhofer.de |

| Amali Sakthi | HPC | Dr. Mgr educational and research institute | amali1021998-hjå-gmail.com |

| Ralf Schulze-Riegert | Physics | Schlumberger | RSchulze-Riegert-hjå-slb.com |

| Abhishek Shah | Mathematics | NERSC | abhishek.nit07-hjå-gmail.com |

| Ashok Sharma | Informatics | University Jalandhar Phagwara India | drashoksharma-hjå-hotmail.co.in |

| Jorge Armando Sicacha Parada | Statistics | NTNU | jorge.sicacha-hjå-ntnu.no |

| Thiago Lima Silva | Optimization | NTNU | thiago.l.silva-hjå-ntnu.no |

| PARVEEN SINGH | Informatics | JAMMU UNIVERSITY | imparveen-hjå-yahoo.com |

| Audun Skau Hansen | Chemistry | UiO | a.s.hansen-hjå-kjemi.uio.no |

| Kusti Skytén | Statistics | University of Oslo | k.o.skyten-hjå-medisin.uio.no |

| Anders D. Sleire | Statistics | Tryg Insurance / University of Bergen | sleand-hjå-tryg.no |

| Anders Christiansen Sørby | Statistics | NTNU | user8715-hjå-gmail.com |

| Michail Spitieris | Statistics | NTNU | michail.spitieris-hjå-ntnu.no |

| Alexander Johannes Stasik | Statistics | University of Oslo | a.j.stasik-hjå-fys.uio.no |

| Christer Steinfinsbø | Informatics | UiB / HVL | cst027-hjå-uib.no |

| Andrea Storås | Pharmacy | Universitetet i Oslo | atstoraa-hjå-student.farmasi.uio.no |

| Henrik Sperre Sundklakk | Mathematics | NTNU | henrik.s.sundklakk-hjå-ntnu.no |

| Amine Tadjer | Statistics | UiS | amine.tadjer-hjå-uis.no |

| Gunnar Taraldsen | Statistics | NTNU | gunnar.taraldsen-hjå-ntnu.no |

| Owen Matthew Truscott Thomas | Statistics | University of Oslo | o.m.t.thomas-hjå-medisin.uio.no |

| Tomas Torgrimsby | Mathematics | Universitetet i Oslo | torgrimsby-hjå-gmail.com |

| Veronica Alejandra Torres | AI / Geophysics | NTNU | veronica.a.t.caceres-hjå-ntnu.no |

| Jon Gustav Vabø | Mathematics | Equinor | jgv-hjå-equinor.com |

| Silius Mortensønn Vandeskog | Statistics | NTNU | siliusv-hjå-gmail.com |

| Sriram Venkatesan | Physics | Paul Scherrer Institut | sriram.venkatesan-hjå-psi.ch |

| Victor Wattin Håkansson | Statistics | NTNU | victor.haakansson-hjå-ntnu.no |

| Fabio Zeiser | Physics | UiO | fabiobz-hjå-fys.uio.no |

| Xiaokang Zhang | Informatics | University of Bergen | xiaokang.zhang-hjå-uib.no |

| Mira Lilleholt Vik | Statistics | NTNU | miralilleholtvik-hjå-gmail.com |

| Julie Johanne Uv | Statistics | NTNU | julieju-hjå-stud.ntnu.no |

| Amjed Mohammed | Physics | | amjed.mohammed-hjå-uni-oldenburg.de |

| Daniel Thilo Schroeder | Informatics | Simula, TU Berlin | daniels-hjå-simula.no |