Machine learning for physics-based modeling

Purely data-driven models often struggle to generalize beyond their training data—especially in complex systems where observations are sparse or noisy. Physics-based models, grounded in fundamental laws, offer strong generalization but can be computationally expensive or rely on simplifications. Hybrid modeling techniques combine these approaches to produce models that are both accurate and efficient. This is particularly valuable for subsurface systems, where direct measurements are limited and uncertainty is high.

What do we do?

We develop hybrid modeling methods that integrate physical insight with machine learning to create fast, accurate flow models for complex systems. Our primary focus is on subsurface applications—including hydrocarbon recovery, CO₂ storage, geothermal energy, and gas storage—where uncertainty is high and data availability is limited.

🧠 Treating the simulator as a neural network

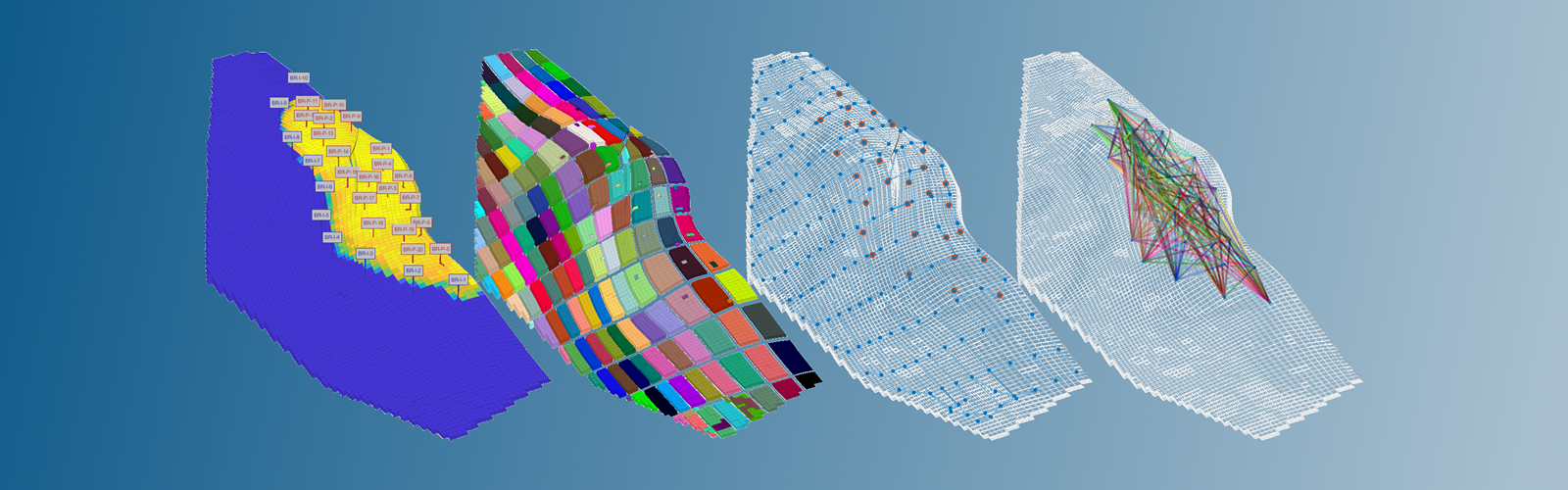

In our CGNet approach, we use a fully differentiable flow simulator as a trainable model, conceptually analogous to a neural network. The model architecture is defined by the computational graph induced by a finite-volume discretization over a coarse grid (or a coarse partition of a more accurate grid), where physical properties such as pore volumes and transmissibilities act as tunable weights. These weights are calibrated using gradient-based optimization, with automatic differentiation and adjoint methods providing the equivalent of backpropagation. CGNet can be trained to match observed field data or to emulate high-fidelity simulation output, giving rapid, physics-consistent predictions at reduced computational cost.
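The idea of calibrating physical parameters as if they were network weights can be illustrated with a toy sketch. This is not the CGNet implementation: it assumes a hypothetical two-cell tank model with explicit time stepping, uses a small hand-written forward-mode automatic differentiation class (`Dual`) in place of adjoints or backpropagation, and tunes only one transmissibility and one pore volume. All names (`Dual`, `simulate`, `loss`, `gradient`, `calibrate`) and all numerical values are illustrative assumptions.

```python
class Dual:
    """Value plus derivative, propagated by forward-mode automatic differentiation."""

    def __init__(self, val, der=0.0):
        self.val, self.der = val, der

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __sub__(self, other):
        o = Dual.lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, other):
        return Dual.lift(other) - self

    def __mul__(self, other):
        o = Dual.lift(other)
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__

    def __truediv__(self, other):
        o = Dual.lift(other)
        return Dual(self.val / o.val,
                    (self.der * o.val - self.val * o.der) / (o.val * o.val))


def simulate(trans, pore_vol2, pore_vol1=1.0, n_steps=40, dt=0.05):
    """Toy two-cell model (assumption): a well drains cell 1, cell 2 feeds cell 1."""
    p1, p2 = 1.0, 1.0                  # initial dimensionless pressures
    p_well, well_index = 0.0, 1.0      # fixed well control and well index
    rates = []
    for _ in range(n_steps):
        q_well = well_index * (p1 - p_well)
        flux = trans * (p2 - p1)       # flow from cell 2 into cell 1
        p1, p2 = (p1 + dt * (flux - q_well) / pore_vol1,
                  p2 - dt * flux / pore_vol2)
        rates.append(q_well)
    return rates


def loss(params, observed):
    """Sum of squared mismatches between simulated and observed well rates."""
    total = 0.0
    for r, o in zip(simulate(params[0], params[1]), observed):
        total = total + (r - o) * (r - o)
    return total


def gradient(params, observed):
    """One forward-mode sweep per parameter; adjoints would need a single sweep."""
    grad = []
    for i in range(len(params)):
        seeded = [Dual(v, 1.0 if j == i else 0.0) for j, v in enumerate(params)]
        grad.append(Dual.lift(loss(seeded, observed)).der)
    return grad


def calibrate(params, observed, n_iter=200, step=1.0):
    """Gradient descent with backtracking; only loss-reducing steps are accepted."""
    current = loss(params, observed)
    for _ in range(n_iter):
        g = gradient(params, observed)
        while step > 1e-12:
            trial = [v - step * gi for v, gi in zip(params, g)]
            if min(trial) > 0.0:       # keep physical parameters positive
                trial_loss = loss(trial, observed)
                if trial_loss < current:
                    params, current = trial, trial_loss
                    step *= 2.0
                    break
            step *= 0.5
    return params, current


if __name__ == "__main__":
    observed = simulate(2.0, 3.0)      # synthetic "field data" from known parameters
    fitted, final_loss = calibrate([1.0, 1.0], observed)
    print("calibrated [trans, pore_vol2]:", fitted, "loss:", final_loss)
```

Forward-mode differentiation needs one sweep per parameter, which is why adjoint (reverse-mode) methods are the natural choice once a model has many pore volumes and transmissibilities.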


🔁 Other hybrid flow models

CGNet is one of several hybrid approaches we develop. We also work with numerical interwell network models such as GPSNet, FlowNet, and StellNet, which use simplified flow networks trained on field or simulation data to predict reservoir behavior quickly. For unconventional resources such as shale oil, we use calibrated 1D models to predict production from hydraulically fractured, ultra-low-permeability systems, capturing fracture–matrix interactions and transient flow dynamics.


📚 Standard machine learning approaches

We have experience applying established techniques such as Physics-Informed Neural Networks (PINNs), Fourier Neural Operators (FNOs), Pseudo-Hamiltonian Neural Networks (PHNNs), and other physics-aware architectures. These methods can be valuable when parts of the physics are unknown or when full-scale simulation is too computationally expensive. In addition, we have developed fast surrogate models for estimating observables from simulators by combining machine learning with state-of-the-art uncertainty quantification techniques. These surrogate models enable efficient gradient-based optimization even on black-box simulators. By training on multi-fidelity data, we achieve better accuracy for the same computational cost.
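The benefit of multi-fidelity training can be sketched with a deliberately simple toy, which is not the surrogate method described above. It assumes two synthetic functions, `high_fidelity` (standing in for an expensive simulator) and `low_fidelity` (a cheap, biased approximation), and fits a linear correction to the low-fidelity output using the same four high-fidelity samples that a single-fidelity fit would use. The function names, the additive-correction form, and the sample points are all illustrative assumptions.

```python
import math


def high_fidelity(x):
    """Synthetic stand-in for an expensive simulator observable."""
    return math.sin(2 * math.pi * x) + 0.2 * x * x


def low_fidelity(x):
    """Synthetic stand-in for a cheap model that misses part of the response."""
    return math.sin(2 * math.pi * x)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def max_error(model, n=101):
    """Largest absolute error against the high-fidelity function on [0, 1]."""
    grid = [i / (n - 1) for i in range(n)]
    return max(abs(model(x) - high_fidelity(x)) for x in grid)


# The same four expensive samples are used by both surrogates.
x_hf = [0.0, 1 / 3, 2 / 3, 1.0]
y_hf = [high_fidelity(x) for x in x_hf]

# Single-fidelity surrogate: a line fitted directly to the expensive samples.
a0, a1 = fit_line(x_hf, y_hf)


def hf_only(x):
    return a0 + a1 * x


# Multi-fidelity surrogate: cheap model plus a line fitted to the discrepancy.
c0, c1 = fit_line(x_hf, [y - low_fidelity(x) for x, y in zip(x_hf, y_hf)])


def multi_fidelity(x):
    return low_fidelity(x) + c0 + c1 * x


hf_only_error = max_error(hf_only)
multi_fidelity_error = max_error(multi_fidelity)
print("single-fidelity max error:", hf_only_error)
print("multi-fidelity max error: ", multi_fidelity_error)
```

In this toy the low-fidelity model carries the oscillatory shape, so the few expensive samples only have to explain a smooth discrepancy. A practical surrogate would replace the line fit with a richer learner and attach uncertainty estimates.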


🧩 System identification and inverse modeling

We explore how to learn governing equations directly from data, including system identification for nonlinear PDEs. In preliminary tests, we enforce known physical constraints by altering the assumptions placed on the learned Hamiltonian, which yields interpretable and physically plausible models. We are also developing methods to embed neural networks implicitly into simulators, so that inverse problems can be solved by combining differentiable simulators, functional approximations (e.g., neural networks), and a priori constraints.
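As a minimal illustration of identifying a system through an assumed Hamiltonian structure, the sketch below fits a separable quadratic Hamiltonian H(q, p) = a q² + b p² to trajectory data from a synthetic harmonic oscillator. It is an ODE toy under strong assumptions, not the method used for nonlinear PDEs: the data are generated analytically, derivatives come from central differences, and the names `make_trajectory`, `central_diff`, and `fit_hamiltonian` are hypothetical.

```python
import math


def make_trajectory(k=4.0, m=1.0, dt=0.01, n=1000):
    """Synthetic oscillator data with H = p^2 / (2 m) + k q^2 / 2."""
    omega = math.sqrt(k / m)
    times = [i * dt for i in range(n)]
    q = [math.cos(omega * t) for t in times]
    p = [-m * omega * math.sin(omega * t) for t in times]
    return q, p, dt


def central_diff(ys, dt):
    """Second-order time derivative estimate at the interior samples."""
    return [(ys[i + 1] - ys[i - 1]) / (2 * dt) for i in range(1, len(ys) - 1)]


def fit_hamiltonian(q, p, dt):
    """Least-squares fit of a and b in H(q, p) = a q^2 + b p^2."""
    dq, dp = central_diff(q, dt), central_diff(p, dt)
    q_in, p_in = q[1:-1], p[1:-1]
    # Hamilton's equations: dq/dt = dH/dp = 2 b p
    b = sum(d * x for d, x in zip(dq, p_in)) / (2 * sum(x * x for x in p_in))
    # Hamilton's equations: dp/dt = -dH/dq = -2 a q
    a = -sum(d * x for d, x in zip(dp, q_in)) / (2 * sum(x * x for x in q_in))
    return a, b


if __name__ == "__main__":
    a, b = fit_hamiltonian(*make_trajectory())
    # For k = 4 and m = 1 the generating values are a = k / 2 and b = 1 / (2 m).
    print("fitted a:", a, "fitted b:", b)
```

Because the model is constrained to Hamiltonian form, any fitted pair (a, b) defines dynamics that conserve the learned energy, and the two coefficients map directly to a stiffness and an inverse mass.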


🔄 Neural networks as correctors

We are currently exploring predictor–corrector hybrid frameworks, in which a calibrated simulator or a physics-based model such as CGNet serves as the predictor and a neural network acts as the corrector. The predictor enforces core physical principles, while the corrector improves accuracy by learning from mismatches with observed data. This approach preserves physical consistency while improving prediction fidelity.
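A predictor–corrector setup of this kind can be sketched as follows. The sketch assumes a hypothetical exponential-decline `predictor` standing in for a calibrated physics-based model, synthetic observations with a systematic mismatch, and a tiny one-hidden-layer `corrector` network trained on the residuals with plain gradient descent. Network size, learning rate, and data are illustrative choices, not those of the framework described above.

```python
import math
import random


def predictor(t, q0=1.0, tau=0.5):
    """Physics-based stand-in (assumption): exponential production decline."""
    return q0 * math.exp(-t / tau)


def corrector(params, t):
    """One-hidden-layer tanh network mapping time to a rate correction."""
    w1, b1, w2, b2 = params
    return sum(w * math.tanh(a * t + b) for w, a, b in zip(w2, w1, b1)) + b2


def train_corrector(ts, residuals, hidden=4, lr=0.05, epochs=2000, seed=0):
    """Full-batch gradient descent on the mean squared residual error."""
    rng = random.Random(seed)
    w1 = [rng.uniform(-2.0, 2.0) for _ in range(hidden)]
    b1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    w2, b2 = [0.0] * hidden, 0.0       # zero output layer: start with no correction
    n = len(ts)
    for _ in range(epochs):
        g_w1, g_b1, g_w2, g_b2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
        for t, r in zip(ts, residuals):
            h = [math.tanh(a * t + b) for a, b in zip(w1, b1)]
            err = sum(w * hj for w, hj in zip(w2, h)) + b2 - r
            for j in range(hidden):
                g_w2[j] += 2.0 * err * h[j] / n
                d_pre = 2.0 * err * w2[j] * (1.0 - h[j] * h[j]) / n
                g_w1[j] += d_pre * t
                g_b1[j] += d_pre
            g_b2 += 2.0 * err / n
        w1 = [v - lr * g for v, g in zip(w1, g_w1)]
        b1 = [v - lr * g for v, g in zip(b1, g_b1)]
        w2 = [v - lr * g for v, g in zip(w2, g_w2)]
        b2 -= lr * g_b2
    return w1, b1, w2, b2


def mse(model, ts, observed):
    return sum((model(t) - o) ** 2 for t, o in zip(ts, observed)) / len(ts)


# Synthetic observations: the predictor plus a smooth, systematic mismatch.
ts = [i / 24 for i in range(25)]
observed = [predictor(t) + 0.1 + 0.2 * math.sin(3 * t) for t in ts]

# The corrector only learns what the predictor fails to explain.
residuals = [o - predictor(t) for t, o in zip(ts, observed)]
params = train_corrector(ts, residuals)


def hybrid(t):
    return predictor(t) + corrector(params, t)


predictor_mse = mse(predictor, ts, observed)
hybrid_mse = mse(hybrid, ts, observed)
print("predictor-only MSE:", predictor_mse)
print("hybrid MSE:        ", hybrid_mse)
```

Initializing the output layer at zero means the hybrid starts out identical to the physics-based predictor, so training can only add a learned correction on top of it.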


🌍 Supporting physics-informed digital twins

Our hybrid models form a foundation for building digital twins of subsurface systems: dynamic, real-time simulations that integrate operational data and sensor inputs. These digital twins are designed to improve understanding of complex processes and support more effective decision-making throughout the asset lifecycle. By combining fast surrogate models with continuous data updates, they enable forecasting, monitoring, and optimization under uncertainty. Much of our work in this area has focused on geothermal energy systems, where real-time modeling and data integration are essential for efficient resource management, performance prediction, and operational planning.
