Simulation of data enrichment pipelines

Contact person

Keywords: Big data pipelines, Data enrichment, Software containers, Testing, Simulation

Big data pipelines are essential for processing data efficiently, but they are complex and dynamic in nature. They involve multiple data processing steps that are executed on diverse computing resources within the Edge-Cloud paradigm.

Capturing this complexity requires testing and simulating big data pipelines on heterogeneous resources. However, because big data processing is resource-intensive, relying on historical execution data for testing and simulation is impractical, and a different approach is needed.

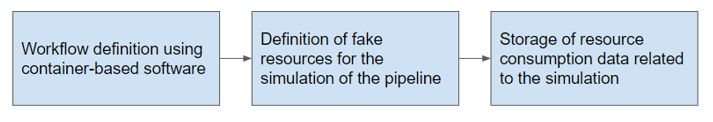

The proposed approach is based on dry running big data pipelines step by step in an isolated environment using container-based technologies. A dry run is the execution of a pipeline on a sample or reduced-size input in a test environment, as opposed to using the infrastructure for production deployment. The whole architecture is described in the paper: https://ieeexplore.ieee.org/document/9842538.
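To make the idea of a dry run concrete, the following is a minimal in-process sketch, not the architecture from the paper. It assumes that each pipeline step can be modelled as a plain Python function over a list of records. In the actual setup each step would run in its own container. The names `sample_input`, `dry_run`, `clean`, and `enrich` are hypothetical and chosen only for illustration.

```python
import random
import time
from typing import Callable, Dict, List, Sequence, Tuple

# Assumption: a step is a function from a list of records to a list of records.
Step = Callable[[List[dict]], List[dict]]


def sample_input(records: Sequence[dict], fraction: float, seed: int = 0) -> List[dict]:
    """Return a reproducible random sample of the input records."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    # Keep at least one record, but never more than are available.
    k = min(len(records), max(1, round(len(records) * fraction)))
    return random.Random(seed).sample(list(records), k)


def dry_run(
    steps: Dict[str, Step], records: Sequence[dict], fraction: float = 0.1
) -> Tuple[List[dict], Dict[str, float]]:
    """Run the steps in order on a sample and record wall-clock time per step."""
    data = sample_input(records, fraction)
    timings: Dict[str, float] = {}
    for name, step in steps.items():
        start = time.perf_counter()
        data = step(data)
        timings[name] = time.perf_counter() - start
    return data, timings


# Two toy steps standing in for real, containerised pipeline steps.
def clean(records: List[dict]) -> List[dict]:
    """Drop records with a missing value."""
    return [r for r in records if r["value"] is not None]


def enrich(records: List[dict]) -> List[dict]:
    """Add a derived field to every record."""
    return [{**r, "value_squared": r["value"] ** 2} for r in records]


if __name__ == "__main__":
    full_input = [{"id": i, "value": None if i % 5 == 0 else i} for i in range(100)]
    output, timings = dry_run({"clean": clean, "enrich": enrich}, full_input, fraction=0.1)
    print(len(output), timings)
```

Measuring each step separately is what allows per-step metrics to be collected. In a container-based setup the same loop would launch one container per step and read CPU, memory, and disk usage from the orchestrator rather than timing a function call.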

Tech stack: Kubernetes as a software-container orchestrator, Argo Workflows as a workflow manager, and KWOK (Kubernetes WithOut Kubelet) as a simulator of resources.

Work to be done:

- Application of the proposed simulator to data enrichment pipelines in order to test their horizontal and vertical scalability.

- Identification of possible bottlenecks and improvements in any step of the pipelines using statistical and analytical methods. Data collected from each simulation (e.g., CPU, memory, and disk usage) can be used to estimate the infrastructure resources required for scaling the pipeline in a production environment.

- Data enrichment in streams, including real-time sensor data from measurement streams and text data streams. Data streaming pipelines require specific architectures, such as Lambda and Kappa, to cope with the high velocity and volatility of data. For example, different measures from the same sensor and measures from different sensors can be combined into multivariate time series, and news articles can be grouped into events so that news is organized as a stream of events.
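As an illustration of the second item above, the sketch below shows one simple way that metrics collected from dry runs might be used to flag a bottleneck and extrapolate resource needs. It assumes that peak memory grows roughly linearly with input size, which may not hold for a real pipeline. The function names `fit_line`, `estimate`, and `slowest_step`, and all numbers in the usage section, are made up for illustration and are not measurements.

```python
from typing import Dict, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; cannot fit a line")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def estimate(x: float, slope: float, intercept: float) -> float:
    """Extrapolate the fitted line to a new input size."""
    return slope * x + intercept


def slowest_step(timings: Dict[str, float]) -> str:
    """Return the step with the largest measured duration (a bottleneck candidate)."""
    return max(timings, key=timings.get)


if __name__ == "__main__":
    # Illustrative values only: input size in GB and peak memory in MB
    # for three hypothetical dry runs of one pipeline step.
    input_gb = [1.0, 2.0, 4.0]
    peak_mem_mb = [300.0, 500.0, 900.0]
    slope, intercept = fit_line(input_gb, peak_mem_mb)
    print("estimated peak memory at 50 GB (MB):", estimate(50.0, slope, intercept))

    # Illustrative per-step durations in seconds.
    print("bottleneck candidate:", slowest_step({"clean": 1.2, "enrich": 7.5, "store": 0.8}))
```

A linear fit is only the simplest choice. If dry runs at several input sizes show curvature, a different model would be more appropriate, and extrapolating far beyond the sampled sizes should be treated with caution.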