Self-learning from first person video for robotic automation of tasks

Master Project

For autonomous robots to function in the real world, artificial intelligence alone is not sufficient; they also need reliable sensors to see in changing conditions, and robust control of a physical robot platform.

To achieve such physical intelligence, new research is needed at the intersection of sensor design, machine learning and robot control.

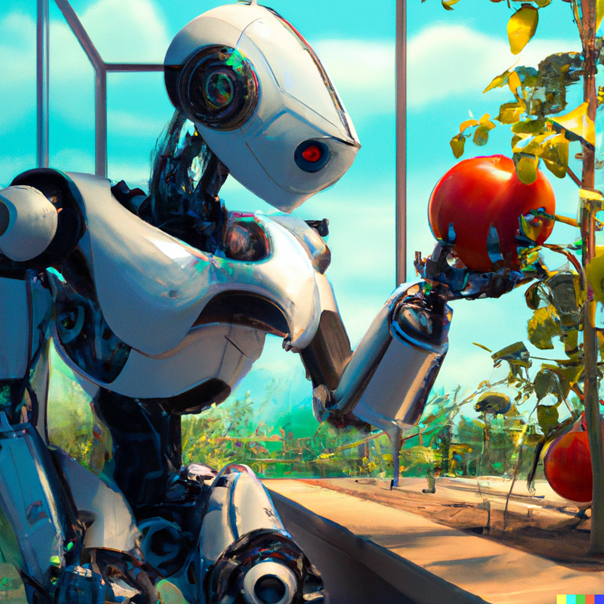

Agricultural robotics is a particularly demanding application, with its large seasonal variations and high diversity in crops and environments. Today, many labor-intensive agricultural operations, such as pruning, weeding and picking, must be done manually by seasonal workers. These tasks are very difficult to automate and require technology that is beyond the state of the art in robotics, computer vision and sensors.

The scenes are visually very complex and, as with all real-world tasks, labeled training data is very scarce. Some of the tasks one wishes to automate are also based on hard-to-define concepts such as "experience" and "intuition". The ROBOFARMER project will capture first-person video and eye-tracking data streams from human workers performing plant interactions, and leverage this data to develop self-learning methods.

Research Topic Focus

The master project will use commercially available eye-tracking glasses to collect data from people performing everyday tasks (chosen to make data collection easy). The hypothesis is that, by using eye tracking as the input signal and the positions of the hands and head as the target, a self-learning algorithm can be built that reliably predicts actions from eye tracking alone.
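One way to picture this self-supervised setup is as a pairing step: each gaze sample becomes an input, and the hand position recorded a short time later becomes its training target, so no manual labels are needed. The following is a minimal sketch under stated assumptions, not the project's method. The `GazeSample` and `HandSample` records, the 0.5 s prediction horizon and the 50 ms matching tolerance are all hypothetical and chosen only for illustration; head position could be paired in the same way.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class GazeSample:
    t: float  # timestamp in seconds (assumed shared clock with the video)
    x: float  # gaze point in normalized image coordinates
    y: float


@dataclass
class HandSample:
    t: float  # timestamp in seconds
    x: float  # hand position in normalized image coordinates
    y: float


Pair = Tuple[Tuple[float, float], Tuple[float, float]]


def make_training_pairs(
    gaze: List[GazeSample],
    hands: List[HandSample],
    horizon: float = 0.5,     # hypothetical: how far ahead to predict
    tolerance: float = 0.05,  # hypothetical: max timestamp mismatch
) -> List[Pair]:
    """Pair each gaze sample with the hand sample nearest to t + horizon.

    `hands` must be sorted by timestamp. Gaze samples with no hand
    sample within `tolerance` of the target time are skipped.
    """
    hand_times = [h.t for h in hands]
    pairs: List[Pair] = []
    for g in gaze:
        target_t = g.t + horizon
        i = bisect_left(hand_times, target_t)
        # The nearest hand sample is either just before or just after target_t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(hands)]
        if not candidates:
            continue
        best = min(candidates, key=lambda j: abs(hand_times[j] - target_t))
        if abs(hand_times[best] - target_t) <= tolerance:
            pairs.append(((g.x, g.y), (hands[best].x, hands[best].y)))
    return pairs
```

The resulting (gaze, future hand position) pairs could then serve as input and target for a learned predictor; in practice the input would more likely be a window of gaze samples together with video features rather than a single point.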

The thesis will have an element of hands-on hardware interaction, as is common with real-world applications. It will cover integration with eye-tracking glasses and a first-person camera, as well as applying a hand-tracking algorithm to the video streams.

Expected Results and Learning Outcome

- Definition of an appropriate deep learning model for self-supervised learning

- Software prototype

- Evaluation of the prototype in real business use cases

- Publication

Recommended Prerequisites

Experience with or courses in machine learning, deep learning and computer vision, and familiarity with Python and DL frameworks (PyTorch preferred), are recommended. Experience with visual processing using deep learning models will be considered an advantage.

References

- Wang, S., Ouyang, X., Liu, T., Wang, Q., & Shen, D. (2022). Follow My Eye: Using Gaze to Supervise Computer-Aided Diagnosis. IEEE Transactions on Medical Imaging.

- Shafiullah, N. M. M., Paxton, C., Pinto, L., Chintala, S., & Szlam, A. (2022). CLIP-Fields: Weakly Supervised Semantic Fields for Robotic Memory. arXiv preprint arXiv:2210.05663.

The results will be used in the ROBOFARMER research project.