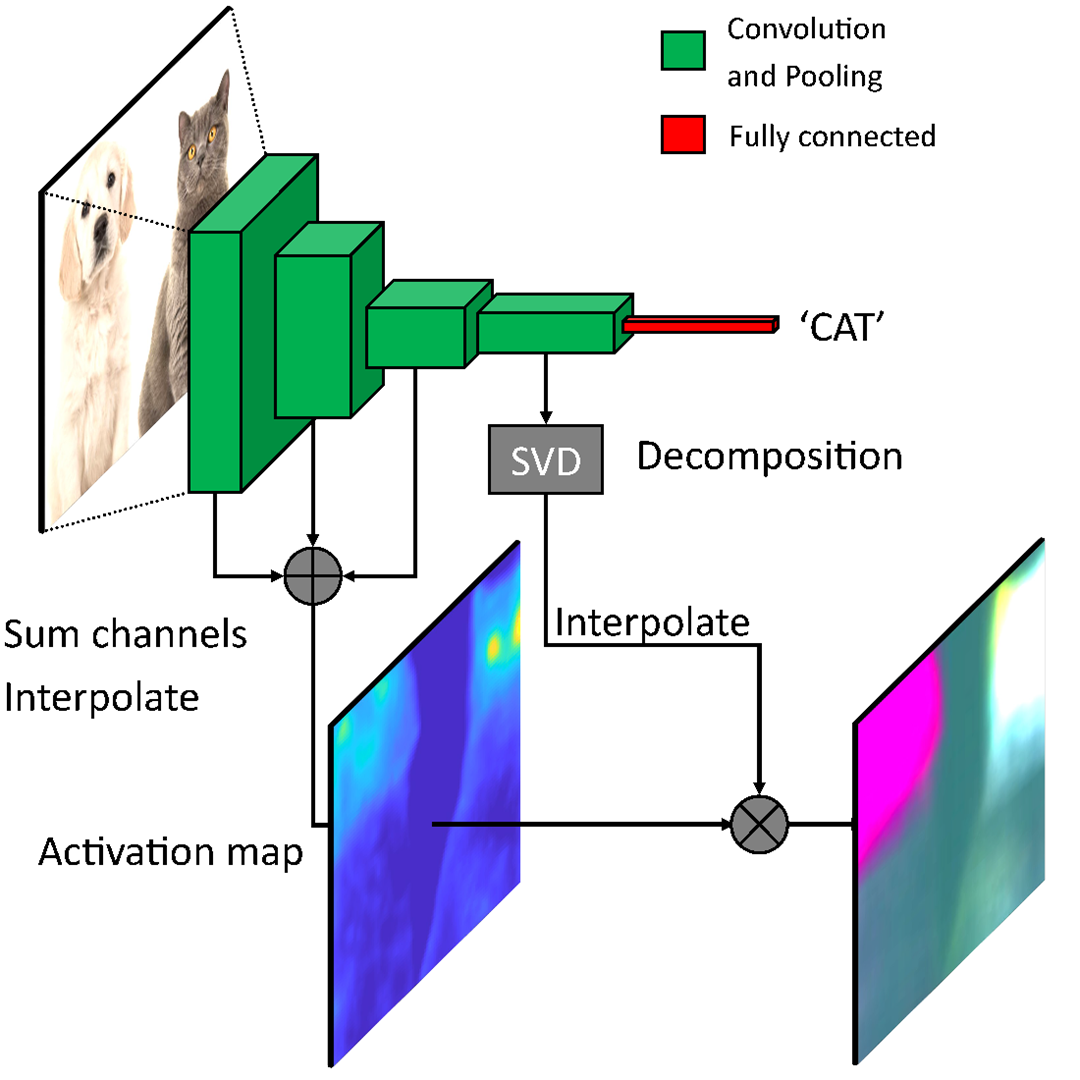

Principal feature visualization (PFV) [1] is a visualization technique for convolutional neural networks that highlights the contrasting features in a batch of images. It produces one RGB heatmap per input image.

PFV uses a single forward pass of the original network to map principal features from the final convolutional layer to the original image space as RGB channels. Because it works on a batch of images, it extracts contrasting features, not just the ones most dominant for the classification. This makes it possible to distinguish several features within one image in an unsupervised manner, which in turn helps to assess the feasibility of transfer learning and to debug a pre-trained classifier by localising misleading or missing features.
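The steps described above can be sketched in a few lines of NumPy. This is a simplified illustration, not the authors' implementation [2]. It assumes the final-layer activations of the batch have already been extracted as an array of shape `(N, C, H, W)` (for example with a forward hook in your framework), computes plain PCA via SVD over all spatial positions of all images, and min-max scales each of the first three components to [0, 1] so they can serve as RGB channels. The function name `principal_feature_rgb` is hypothetical.

```python
import numpy as np


def principal_feature_rgb(features: np.ndarray, n_components: int = 3) -> np.ndarray:
    """Map final-layer conv features of a batch to per-image RGB heatmaps via PCA.

    features: (N, C, H, W) activations of the final convolutional layer for a
        batch of N images (assumed layout; C and N*H*W must be >= n_components).
    Returns: (N, H, W, n_components) array with values in [0, 1].
    """
    n, c, h, w = features.shape
    # Treat every spatial position of every image as one C-dimensional sample.
    x = features.transpose(0, 2, 3, 1).reshape(-1, c)
    # Centre over the whole batch so components can express contrasts between images.
    x_centered = x - x.mean(axis=0, keepdims=True)
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(x_centered, full_matrices=False)
    # Project every sample onto the first n_components principal directions.
    proj = x_centered @ vt[:n_components].T  # shape (N*H*W, n_components)
    # Min-max scale each component independently to [0, 1] for display as a colour channel.
    lo = proj.min(axis=0, keepdims=True)
    hi = proj.max(axis=0, keepdims=True)
    rgb = (proj - lo) / np.maximum(hi - lo, 1e-12)
    # Restore the per-image spatial layout: one RGB heatmap per input image.
    return rgb.reshape(n, h, w, n_components)


# Usage with random stand-in activations (a real run would use network features).
rng = np.random.default_rng(0)
feats = rng.standard_normal((4, 512, 7, 7))
heatmaps = principal_feature_rgb(feats)
print(heatmaps.shape)  # (4, 7, 7, 3)
```

In this sketch, centring over the whole batch rather than per image is what lets the components capture differences between images. The sign of each principal component is arbitrary, so a given colour carries no fixed meaning across different batches.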

One of the main issues with bottleneck networks is that they give no visual explanation of their output: it is not possible to tell which parts of the image contributed to the decision. There is therefore a demand for methods that visualise or explain the decision-making process of such networks in a way that humans can understand.

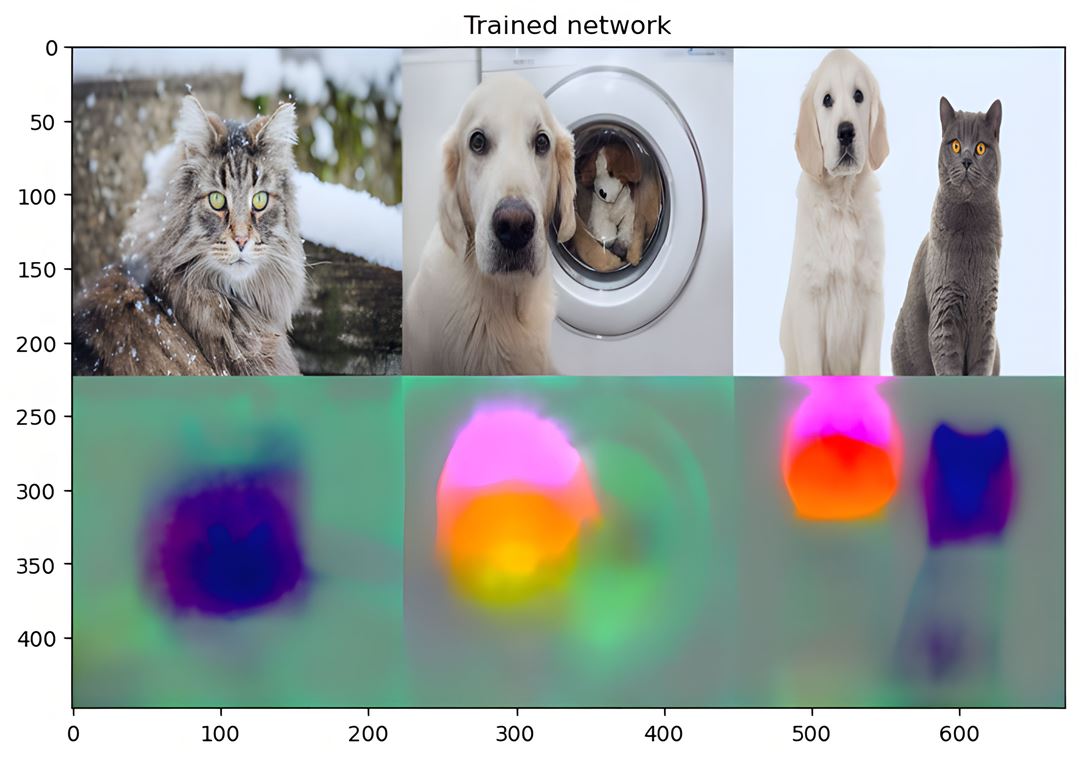

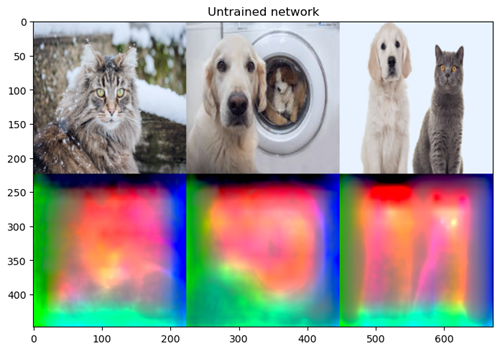

PFV is by design a lightweight contrastive visualization: it highlights the pixels that are most "important" for the network's output decision (e.g., classification or regression). The application to object classification networks is straightforward. Figure 1 and Figure 2 illustrate the typical output of this method on a trained and an untrained (random) classification network. The output is human-readable and can provide useful clues for debugging and understanding the network.
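The heatmaps are computed at the spatial resolution of the final convolutional layer, so they have to be brought back to image space before they can be read as in the figures. Below is a minimal sketch of that step, assuming nearest-neighbour upsampling and simple alpha blending; both are illustrative choices (smoother interpolation such as bilinear is common in practice), and the PFV code [2] may do this differently. The names `overlay_heatmap` and `alpha` are hypothetical.

```python
import numpy as np


def overlay_heatmap(image: np.ndarray, heatmap: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Blend a low-resolution RGB heatmap onto an image.

    image: (H, W, 3) float array in [0, 1].
    heatmap: (h, w, 3) float array in [0, 1]; H and W are assumed to be
        integer multiples of h and w.
    alpha: weight of the heatmap in the blend (0 = image only, 1 = heatmap only).
    """
    big_h, big_w, _ = image.shape
    h, w, _ = heatmap.shape
    if big_h % h or big_w % w:
        raise ValueError("image size must be an integer multiple of the heatmap size")
    # Nearest-neighbour upsampling: repeat each heatmap cell over its block of pixels.
    up = np.repeat(np.repeat(heatmap, big_h // h, axis=0), big_w // w, axis=1)
    # Alpha-blend so the highlighted regions stay visible on top of the image.
    return (1.0 - alpha) * image + alpha * up
```

For a 224x224 input and a 7x7 final feature map, each heatmap cell would cover a 32x32 block of pixels.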

In contrast, an untrained (re-initialized) network produces scrambled output, as expected, since none of its neurons carry information that is useful for the classification task.

[1] Bakken, Marianne, et al. "Principal Feature Visualisation in Convolutional Neural Networks." European Conference on Computer Vision. Springer, Cham, 2020.

[2] Source code: https://github.com/SINTEF/PFV

Figure at the top: A trained network shows good localization, and colors are similar for objects of the same class, indicating that the same pattern of neurons was used.